Picture a popular restaurant on a Saturday night. If there's only one host trying to seat a hundred people, you're going to have a long wait and a lot of frustrated customers. That's exactly what happens to a web application when a single server gets hammered with too much traffic.

An AWS load balancer is like having a team of expert hosts. They intelligently greet incoming web traffic and direct each request to the best available server, making sure no single one gets overwhelmed. This simple concept is the key to keeping your applications fast, reliable, and always online.

Understanding the Fundamentals of Load Balancing in AWS

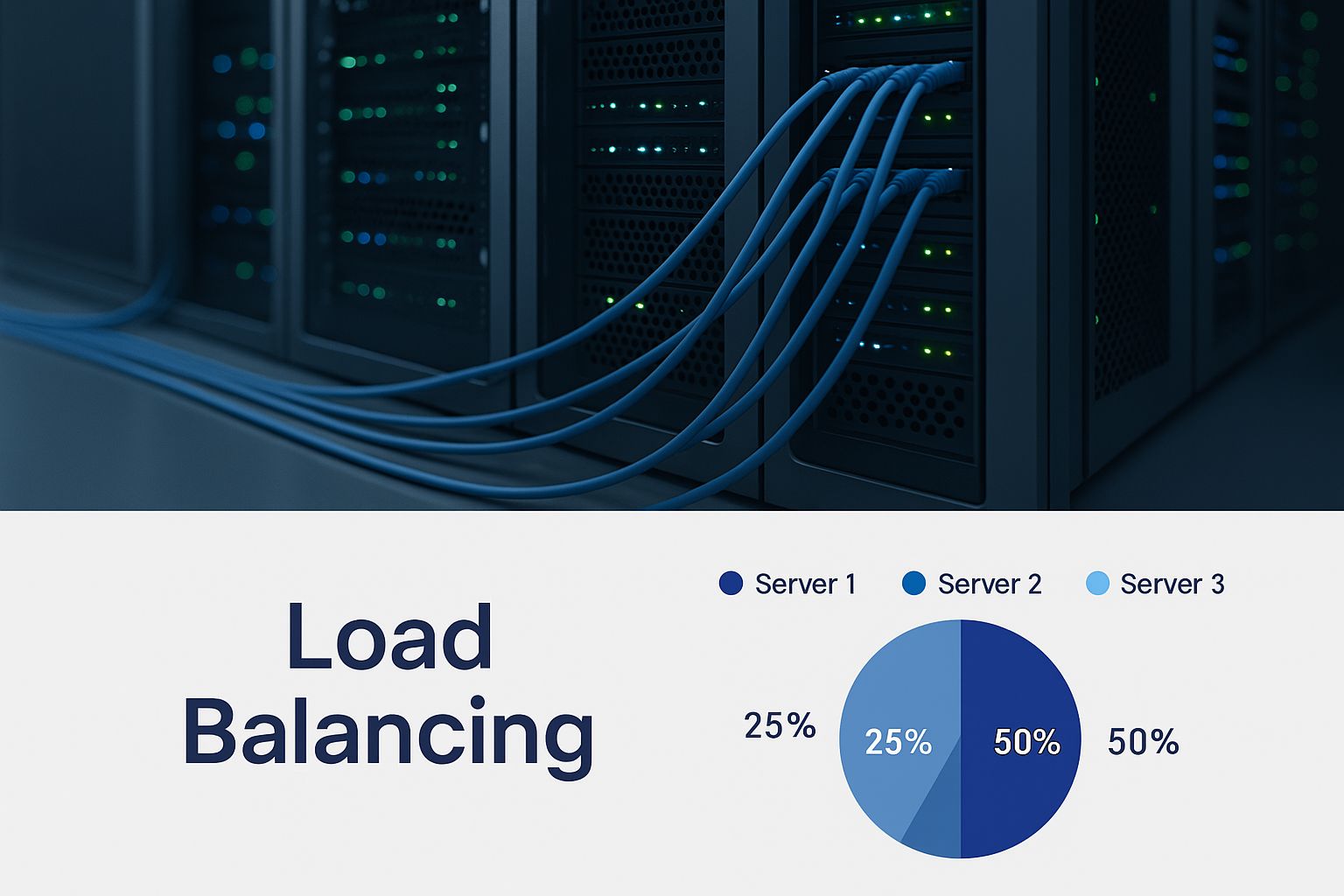

At its core, load balancing in AWS is all about automatically spreading incoming application traffic across multiple targets. These targets could be Amazon EC2 instances, containers, or even specific IP addresses. By distributing the work, you eliminate bottlenecks that would otherwise slow down your application or cause it to crash entirely.

The service that makes all this happen is Elastic Load Balancing (ELB). Think of ELB as the single front door for all your users. It manages the chaotic flow of traffic behind the scenes, ensuring everything runs smoothly. If one of your servers goes offline, ELB detects it through health checks and reroutes traffic to the healthy ones, usually before your users notice there was a problem.
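To make the idea concrete, here's a minimal Python sketch of a balancer that rotates requests across servers in round-robin order and skips any server marked unhealthy. It's a toy model, not ELB's internal implementation, and the class name and instance IDs are hypothetical. Round robin is, however, the default routing algorithm for Application Load Balancer target groups.

```python
from itertools import count


class RoundRobinBalancer:
    """Toy round-robin balancer that skips targets marked unhealthy (hypothetical class)."""

    def __init__(self, targets):
        self.targets = list(targets)      # registration order drives the rotation
        self.healthy = set(self.targets)  # every target starts out healthy
        self._requests = count()          # running request counter

    def mark_unhealthy(self, target):
        self.healthy.discard(target)

    def mark_healthy(self, target):
        if target in self.targets:
            self.healthy.add(target)

    def pick(self):
        """Return the target for the next request, rotating over healthy targets only."""
        pool = [t for t in self.targets if t in self.healthy]
        if not pool:
            raise RuntimeError("no healthy targets to route to")
        return pool[next(self._requests) % len(pool)]


if __name__ == "__main__":
    lb = RoundRobinBalancer(["i-a", "i-b", "i-c"])  # hypothetical instance IDs
    print([lb.pick() for _ in range(3)])  # ['i-a', 'i-b', 'i-c']
    lb.mark_unhealthy("i-b")
    print([lb.pick() for _ in range(4)])  # ['i-c', 'i-a', 'i-c', 'i-a']
```

Once i-b is marked unhealthy, requests simply alternate between the two remaining servers, which is the rerouting behavior described above.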

Why Is Load Balancing a Cornerstone of Cloud Architecture?

In the cloud, traffic can spike without warning. A successful marketing campaign or a viral social media post can send a flood of users to your application. Without a load balancer, that sudden success could easily take your entire system down.

By spreading the load, you're building a system that can absorb sudden surges instead of buckling under them. The key benefits are pretty clear:

- High Availability: It gets rid of single points of failure. If a server fails, your application stays up.

- Improved Scalability: As your traffic grows, you can just add more servers to the group. The load balancer starts sending traffic their way as soon as they're registered and pass their health checks, with no downtime needed.

- Enhanced Performance: Distributing requests lightens the load on each server, which means faster response times for your users.

- Increased Reliability: ELB constantly runs automated health checks on your servers, so it only ever sends traffic to instances that are healthy and ready to respond.

This visual perfectly captures the idea of a load balancer acting as a digital traffic controller.

It’s the central nervous system for any serious cloud infrastructure, directing requests where they need to go with precision and speed.

The Role of AWS Market Leadership

It's no accident that these services are so widely used. As the undisputed leader in cloud infrastructure, Amazon Web Services (AWS) held about 31% of the global market share in the first quarter of 2024.

This dominance means that its Elastic Load Balancing services have been battle-tested at an incredible scale, supporting millions of applications worldwide. You can find more details in this report on AWS market share and its impact.

Choosing the Right AWS Load Balancer

Not all application traffic is created equal. Some requests need smart, content-aware routing, while others just demand raw, unfiltered speed. AWS gets this, which is why they offer a specialized suite of Elastic Load Balancers (ELB), each built for a specific job. Picking the right one is a huge architectural decision that directly impacts your application's performance, resilience, and, of course, your monthly bill.

This choice is more important than ever. AWS Elastic Load Balancer absolutely dominates the market, capturing somewhere between 67% and 75.83% of the global load balancer share. That statistic alone shows how fundamental these tools are for modern cloud applications. If you want to dig deeper into the numbers, Zenduty offers a comprehensive analysis of the competitive landscape.

Making the right call from the ELB family means your infrastructure isn't just working, it's optimized.

Application Load Balancer (ALB): The Intelligent Traffic Director

The Application Load Balancer (ALB) works at the application layer (Layer 7) of the OSI model. Think of it as a smart traffic cop for your web apps. It doesn't just pass traffic along blindly; it actually looks inside each request at the URL path, hostname, and HTTP headers to make clever routing decisions.

This intelligence is what makes the ALB a perfect fit for modern architectures, especially those built with microservices or containers. You can set up a single ALB to handle multiple websites or applications by creating rules that send traffic exactly where it needs to go based on the request's content.

Ideal Use Cases for an ALB:

- Microservices Architectures: Easily route requests to different services based on the URL. For example, /api goes to your API service, while /users goes to the user management service.

- Containerized Applications: Integrates beautifully with Amazon Elastic Container Service (ECS) and Elastic Kubernetes Service (EKS) to manage traffic for dynamic container workloads.

- Hosting Multiple Websites: Use host-based routing to direct traffic for site-one.com and site-two.com to different server groups, all from a single load balancer.

The ALB's real superpower is its ability to perform content-based routing. This lets you build flexible, efficient architectures that are simpler to manage and scale, often cutting down on the number of load balancers you need to deploy (and pay for).
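Here's a simplified Python model of how content-based rules are evaluated: rules are checked in priority order, every condition in a rule must match, and a request that matches nothing falls through to the listener's default action. The rule set and target group names are hypothetical, and Python's fnmatchcase stands in for ALB's wildcard matching (ALB patterns support * and ?, while fnmatch also accepts [ ] character classes, which ALB does not).

```python
from fnmatch import fnmatchcase

# Hypothetical rules: (priority, conditions, target group). Lower numbers are evaluated first.
RULES = [
    (10, {"host": "api.example.com"}, "api-service"),
    (20, {"path": "/users*"}, "user-service"),
    (30, {"path": "/api/*"}, "api-service"),
]
DEFAULT_TARGET_GROUP = "web-frontend"  # stands in for the listener's default action


def route(host, path, rules=RULES, default=DEFAULT_TARGET_GROUP):
    """Pick a target group by checking rules in priority order (simplified ALB-style matching)."""
    for _priority, conditions, target_group in sorted(rules, key=lambda rule: rule[0]):
        # Host matching is case-insensitive; path matching is case-sensitive.
        host_ok = "host" not in conditions or fnmatchcase(host.lower(), conditions["host"])
        path_ok = "path" not in conditions or fnmatchcase(path, conditions["path"])
        if host_ok and path_ok:  # all conditions in a rule must match
            return target_group
    return default  # nothing matched, so fall through to the default action


if __name__ == "__main__":
    print(route("www.example.com", "/users/42"))       # user-service
    print(route("www.example.com", "/api/v1/orders"))  # api-service
    print(route("API.example.com", "/"))               # api-service
    print(route("www.example.com", "/about"))          # web-frontend
```

Because rule 10 matches on the hostname alone, every request to api.example.com goes to the API service regardless of its path, which is why rule priority matters as much as the rules themselves.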

Network Load Balancer (NLB): Built for Extreme Performance

When your top priorities are raw speed and handling millions of requests per second, the Network Load Balancer (NLB) is your answer. It operates at the transport layer (Layer 4) and is engineered for ultra-low latency and massive throughput. It handles TCP, UDP, and TLS traffic by forwarding connections straight to your backend targets.

Unlike an ALB, the NLB doesn’t peek at application content. Its job is pure, high-speed connection forwarding, which makes it incredibly efficient for applications where every millisecond counts. It also gives you a static IP address for each Availability Zone, a feature that can be a lifesaver for certain legacy apps or external services that need a stable IP to whitelist.
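Because an NLB never inspects content, it has to choose a target from connection-level information alone. The Python sketch below hashes a flow's 5-tuple (protocol, source and destination IP, source and destination port) so that every packet in a connection keeps going to the same target. It illustrates the idea only and is not AWS's actual algorithm: AWS documents that for TCP the NLB's flow hash also factors in the TCP sequence number, and the hashing details are internal. The IP addresses and ports are made up.

```python
import hashlib


def pick_target(protocol, src_ip, src_port, dst_ip, dst_port, targets):
    """Map a flow's 5-tuple to one target so every packet in that flow lands on the same target."""
    flow_key = f"{protocol}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(flow_key).digest()  # deterministic, unlike Python's salted hash()
    return targets[int.from_bytes(digest[:8], "big") % len(targets)]


if __name__ == "__main__":
    targets = ["10.0.1.10", "10.0.2.10", "10.0.3.10"]  # hypothetical target IPs
    first = pick_target("TCP", "203.0.113.7", 51514, "198.51.100.1", 443, targets)
    again = pick_target("TCP", "203.0.113.7", 51514, "198.51.100.1", 443, targets)
    print(first == again)  # True: same flow, same target
```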

When to Choose an NLB:

- High-Performance Computing (HPC): Perfect for workloads that require massive, low-latency data processing.

- Online Gaming: Keeps real-time gaming servers running smoothly with rapid TCP or UDP connections.

- Streaming Media: Ensures stable, high-bandwidth connections for applications that stream video or audio.

- IoT Devices: Manages connections from millions of Internet of Things devices communicating over TCP.

The NLB is a specialized tool for when performance is absolutely non-negotiable.

Gateway Load Balancer (GWLB): For Virtual Appliances

The Gateway Load Balancer (GWLB) has a unique and powerful role. It makes it much simpler to deploy, scale, and manage third-party virtual network appliances like firewalls, intrusion detection and prevention systems (IDPS), and deep packet inspection tools.

Instead of sending traffic to application servers, the GWLB acts as a transparent "bump-in-the-wire." It directs all traffic through a fleet of virtual appliances for inspection before it continues to its final destination. This setup creates a highly scalable and fault-tolerant security inspection point without needing complex routing changes.
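On the configuration side, what sets a GWLB apart is the GENEVE protocol: traffic reaches the appliance fleet encapsulated in GENEVE on port 6081. Here's a Python sketch that only builds the keyword arguments you might pass to boto3's elbv2 client (create_load_balancer and create_target_group); nothing calls AWS, and the names, VPC ID, subnet IDs, and health-check port are hypothetical placeholders.

```python
def gwlb_params(name, vpc_id, subnet_ids, health_check_port=80):
    """Build kwargs for the elbv2 create_load_balancer and create_target_group calls of a GWLB."""
    load_balancer = {
        "Name": name,
        "Type": "gateway",            # selects a Gateway Load Balancer
        "Subnets": list(subnet_ids),
    }
    target_group = {
        "Name": f"{name}-tg",
        "Protocol": "GENEVE",         # GWLB wraps each packet in GENEVE...
        "Port": 6081,                 # ...and sends it to the appliances on port 6081
        "VpcId": vpc_id,
        "TargetType": "instance",
        # Health checks can't use GENEVE, so probe the appliances over TCP instead.
        "HealthCheckProtocol": "TCP",
        "HealthCheckPort": str(health_check_port),
    }
    return load_balancer, target_group


if __name__ == "__main__":
    lb, tg = gwlb_params("inspection", "vpc-0abc1234", ["subnet-0aaa", "subnet-0bbb"])
    print(tg["Protocol"], tg["Port"])  # GENEVE 6081
```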

A Nod to the Classic Load Balancer (CLB)

While you can still find it, the Classic Load Balancer (CLB) is a previous-generation tool. It can operate at both Layer 4 and Layer 7 but doesn't have the advanced routing features of the ALB or the extreme performance of the NLB. For any new application, AWS strongly recommends using an ALB or NLB to get the benefits of their superior features, performance, and better pricing models.

Comparison of AWS Elastic Load Balancer Types

To make the decision clearer, it helps to see the different ELB types laid out side-by-side. Each one is engineered for a different layer of the network stack and solves a very different kind of problem.

| Feature | Application Load Balancer (ALB) | Network Load Balancer (NLB) | Gateway Load Balancer (GWLB) |
|---|---|---|---|
| OSI Layer | Layer 7 (Application) | Layer 4 (Transport) | Layer 3 & 4 (Network & Transport) |
| Protocols | HTTP, HTTPS, gRPC | TCP, UDP, TLS | IP (all protocols) |
| Ideal Use Cases | Web applications, microservices, container-based workloads, serverless (Lambda) | High-throughput TCP/UDP workloads, gaming, streaming, IoT, network-level performance | Deploying & scaling virtual appliances like firewalls, IDPS, deep packet inspection systems |
| Routing Rules | Advanced: path-based, host-based, HTTP header, query string, source IP | Basic: Forwards traffic based on port and protocol to target groups | Routes traffic to a target group of virtual appliances as a transparent "bump-in-the-wire" |
| Key Features | Content-based routing, native WAF integration, redirects, fixed-response actions, slow start mode | Ultra-low latency, handles millions of requests/sec, provides a static IP per AZ, preserves source IP | Centralizes security and network inspection, transparent to source/destination, highly scalable & resilient |
| Performance | High performance, optimized for HTTP/S traffic | Extreme performance, built for millions of connections with minimal latency | High performance, optimized for transparently passing traffic through virtual appliances |

Ultimately, your application's architecture and traffic patterns will point you to the right choice. ALBs are the versatile workhorse for web traffic, NLBs are the specialized speed demons, and GWLBs are the go-to for inline security and network inspection.

Configuring Your Load Balancer Architecture

Setting up an AWS load balancer is a bit like assembling a high-performance engine. You have a few critical parts that need to work together perfectly to handle traffic without a hitch. Getting these building blocks right is the first step to building a fault-tolerant system that can grow with your application.

We'll walk through the three main pieces you need to configure: Listeners, Target Groups, and Health Checks. Nail these settings and your system won't just work; it'll be resilient, secure, and ready for whatever you throw at it.

Listeners: The Front Door for Incoming Traffic

A Listener is the very first thing your user's request hits. Think of it as the host at the front door of a busy restaurant. Its only job is to check for connection requests coming in on a specific protocol and port that you've defined.

For example, a typical web app will have a Listener for standard HTTP traffic on port 80 and another for secure HTTPS traffic on port 443. Once a Listener gets a request that matches its rules, it knows exactly what to do next: pass it off to a specific Target Group.

But Listeners can be more than just simple port-checkers. With an Application Load Balancer (ALB), you can set up smart rules that inspect the request's URL path or hostname before deciding where it should go.
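As a sketch of the typical setup described above, the Python function below builds the keyword arguments for two calls to boto3's elbv2 create_listener: an HTTP listener on port 80 that redirects to HTTPS, and an HTTPS listener on port 443 that uses a certificate (for example, one from ACM) and forwards to a target group. It doesn't contact AWS, and every ARN here is a hypothetical placeholder.

```python
def listener_params(lb_arn, target_group_arn, certificate_arn):
    """Return kwargs for two elbv2 create_listener calls (HTTP redirect + HTTPS forward)."""
    http_redirect = {
        "LoadBalancerArn": lb_arn,
        "Protocol": "HTTP",
        "Port": 80,
        "DefaultActions": [{
            "Type": "redirect",
            # Permanently redirect plain HTTP requests to the HTTPS listener.
            "RedirectConfig": {"Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"},
        }],
    }
    https_forward = {
        "LoadBalancerArn": lb_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "Certificates": [{"CertificateArn": certificate_arn}],  # e.g. an ACM certificate
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }
    return http_redirect, https_forward


if __name__ == "__main__":
    http, https = listener_params("my-lb-arn", "my-tg-arn", "my-cert-arn")  # hypothetical ARNs
    print(http["DefaultActions"][0]["Type"], https["Port"])  # redirect 443
```

With real ARNs, you'd pass each dictionary to a client created with boto3.client("elbv2") via client.create_listener(**params).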

Target Groups: The Destination for Your Requests

A Target Group is simply a collection of your backend resources, like EC2 instances or containers, that are ready to process requests. Sticking with our restaurant analogy, if the Listener is the host, the Target Group is a specific section of tables ready to serve the guests.

You can set up multiple Target Groups, each handling a different type of request. You might have one group of servers for your main website and another that just handles API calls. The Listener rules figure out which group gets the traffic, which is a perfect setup for microservices, as you can scale different parts of your app independently.

The real magic of Target Groups happens when you pair them with AWS Auto Scaling. You can automatically add or remove instances from a group based on traffic, so you always have just enough capacity without paying for idle servers.
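One common way to wire Auto Scaling to a target group is a target tracking policy on the ALBRequestCountPerTarget metric, which adds or removes instances to keep the request count per target near a value you choose. The Python sketch below builds the keyword arguments for boto3's autoscaling put_scaling_policy call. It assumes the Auto Scaling group is already attached to the target group, and the group name, ARNs, and the target value of 1000 are hypothetical.

```python
def request_count_policy(asg_name, lb_arn, tg_arn, requests_per_target=1000.0):
    """Build kwargs for autoscaling put_scaling_policy using ALBRequestCountPerTarget."""
    # ResourceLabel format: "app/<lb-name>/<lb-id>/targetgroup/<tg-name>/<tg-id>"
    lb_part = lb_arn.split(":loadbalancer/", 1)[1]  # e.g. "app/my-alb/50dc6c495c0c9188"
    tg_part = tg_arn.split(":", 5)[5]               # e.g. "targetgroup/my-tg/73e2d6bc24d8a067"
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-request-count",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ALBRequestCountPerTarget",
                "ResourceLabel": f"{lb_part}/{tg_part}",
            },
            "TargetValue": requests_per_target,
        },
    }


if __name__ == "__main__":
    policy = request_count_policy(
        "web-asg",  # hypothetical Auto Scaling group
        "arn:aws:elasticloadbalancing:us-east-1:123456789012:loadbalancer/app/my-alb/50dc6c495c0c9188",
        "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/my-tg/73e2d6bc24d8a067",
    )
    print(policy["TargetTrackingConfiguration"]["PredefinedMetricSpecification"]["ResourceLabel"])
```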

Managing these resources is where the cost savings really kick in. Using a scheduler to power down servers when they aren't needed is a simple way to stop wasting money. You can learn more about managing EC2 instances with an automated AWS Instance Scheduler to get a better handle on your budget.

Health Checks: The Vigilant System Monitors

A Health Check is an automated watchdog. The load balancer uses it to constantly monitor the status of every instance in a Target Group. It acts like a manager walking the floor, making sure every server is healthy and ready for work. It does this by sending a tiny request to a specific path on each target, over and over again.

If a server fails to respond correctly after a few tries, the load balancer marks it as "unhealthy." It immediately stops sending traffic to that failing instance and redirects new requests to the remaining healthy ones. This is the absolute cornerstone of a fault-tolerant system.

You have full control over how these checks run.

- Health Check Protocol and Port: The protocol (like HTTP or TCP) and port the load balancer uses for the test.

- Health Check Path: For HTTP/S checks, the specific URL to ping (e.g., /healthcheck).

- Interval: How often the load balancer runs the check against each target.

- Healthy/Unhealthy Threshold: How many consecutive successful or failed checks it takes to change a target's status.

- Timeout: How long the load balancer waits for a response before calling it a failure.

These settings let you decide exactly how fast your system reacts to problems, which is key to minimizing downtime and keeping users happy.
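The threshold logic is easy to model. This Python sketch tracks consecutive passes and failures and flips a target's status only when a streak reaches the configured threshold. The default thresholds used here (5 to become healthy, 2 to become unhealthy) mirror ALB target group defaults at the time of writing, but treat them as assumptions and check your own settings; the class and helper are hypothetical, not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass
class TargetHealth:
    """Tracks one target's status from a stream of health-check results (hypothetical)."""
    healthy_threshold: int = 5    # consecutive passes needed to become healthy
    unhealthy_threshold: int = 2  # consecutive failures needed to become unhealthy
    healthy: bool = True
    streak: int = 0               # consecutive results that disagree with the current status

    def record(self, passed: bool) -> bool:
        """Feed in one check result and return the (possibly updated) status."""
        if passed == self.healthy:
            self.streak = 0       # result confirms the current status, so reset the streak
            return self.healthy
        self.streak += 1
        needed = self.healthy_threshold if passed else self.unhealthy_threshold
        if self.streak >= needed:
            self.healthy = passed
            self.streak = 0
        return self.healthy


def seconds_to_mark_unhealthy(interval_seconds, unhealthy_threshold):
    """Rough time to pull a failed target: about one interval per required failed check."""
    return interval_seconds * unhealthy_threshold


if __name__ == "__main__":
    target = TargetHealth()
    print([target.record(r) for r in [False, False, True, True]])  # [True, False, False, False]
    print(seconds_to_mark_unhealthy(30, 2))  # 60
```

The helper makes the trade-off visible: a shorter interval or lower unhealthy threshold pulls failing targets sooner, at the cost of reacting to brief blips.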

Unifying the Architecture with Key Settings

Beyond these three core components, two other settings pull everything together: security groups and cross-zone load balancing. Security groups are essentially virtual firewalls that control what traffic is allowed to and from your load balancer and backend instances. A common setup is to only allow traffic from the load balancer to your targets, creating a simple but effective security perimeter.

Cross-zone load balancing is a must-have feature for high availability. When it's turned on, each load balancer node spreads traffic evenly across the registered targets in all of your enabled Availability Zones. If you don't enable it, each node only distributes traffic to the targets in its own zone, so instances in a thinly populated zone can end up overloaded. Together with targets spread across several zones, even distribution helps keep traffic flowing to healthy capacity when one zone runs into trouble. For Application Load Balancers, it's on by default, a clear sign of how critical it is for modern cloud design; for Network and Gateway Load Balancers, you have to turn it on yourself.
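A little arithmetic shows why even distribution matters when zones hold different numbers of targets. The Python sketch below computes the share of total traffic each target receives under a simplified model in which clients spread requests evenly across the load balancer's zonal nodes and every zone has at least one target. The zone names and instance counts are hypothetical.

```python
def per_target_share(targets_per_zone, cross_zone):
    """Percent of all traffic each target in a zone receives (simplified model)."""
    if cross_zone:
        # Every node spreads its traffic over all targets, so each gets an equal slice.
        share = 100 / sum(targets_per_zone.values())
        return {zone: share for zone in targets_per_zone}
    # Otherwise each zone's node gets an equal slice and splits it among local targets only.
    zone_share = 100 / len(targets_per_zone)
    return {zone: zone_share / count for zone, count in targets_per_zone.items()}


if __name__ == "__main__":
    zones = {"us-east-1a": 2, "us-east-1b": 8}
    print(per_target_share(zones, cross_zone=False))  # {'us-east-1a': 25.0, 'us-east-1b': 6.25}
    print(per_target_share(zones, cross_zone=True))   # {'us-east-1a': 10.0, 'us-east-1b': 10.0}
```

With cross-zone load balancing off, each of the two instances in the smaller zone carries four times the load of an instance in the larger zone; with it on, all ten share the load equally.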

Securing and Optimizing Your Load Balancer

A well-configured load balancer does a lot more than just point traffic in the right direction. Think of it as a performance engine and a security shield, standing as the first line of defense for your application. Fine-tuning your setup means you can handle massive traffic spikes gracefully while protecting your infrastructure from common threats.

This process really boils down to two equally important goals. On the performance side, it’s all about preparing for the unexpected and keeping an eye on the vital signs. For security, it’s about creating a hardened perimeter that filters out bad actors before they even get a chance to knock on your server's door.

Bolstering Security at the Edge

Your load balancer is the perfect spot to centralize and enforce your security policies. By handling these tasks at the very edge of your network, you simplify your backend architecture and get consistent protection across all your servers.

One of the most effective moves you can make is integrating AWS WAF (Web Application Firewall) with your Application Load Balancer. This service is a powerful filter, inspecting incoming web traffic and blocking common attack patterns like SQL injection and cross-site scripting. Best of all, it lets you create custom rules to shield your application from known vulnerabilities.

Another critical security function is SSL/TLS termination. Instead of juggling encryption certificates on every single backend server (a real headache), you can offload this entire task to the load balancer.

- Centralized Certificate Management: You install and manage your SSL/TLS certificates in one place, using AWS Certificate Manager (ACM). This makes renewals a breeze and dramatically reduces the risk of an expired certificate causing an outage.

- Reduced Server Overhead: Your backend instances are freed from the computationally expensive work of encrypting and decrypting traffic. This lets them focus on what they do best: running your application.

- Enhanced Security: All traffic between the client and the load balancer is strongly encrypted, protecting sensitive user data while it's in transit.

Finally, don't forget about properly configured security groups. These act as virtual firewalls, controlling inbound and outbound traffic. A common best practice is to set up the security group for your backend instances to only accept traffic from the load balancer's security group. This effectively creates a private, secure network perimeter.
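Here's what that rule can look like as a Python sketch that builds the keyword arguments for boto3's EC2 authorize_security_group_ingress call. The key detail is UserIdGroupPairs: the rule references the load balancer's security group rather than an IP range. The security group IDs and port are hypothetical.

```python
def backend_ingress_params(backend_sg_id, lb_sg_id, port=80):
    """kwargs for ec2 authorize_security_group_ingress: allow the app port only from the LB's SG."""
    return {
        "GroupId": backend_sg_id,
        "IpPermissions": [{
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            # Referencing a security group instead of a CIDR range means only traffic
            # from resources in that group (the load balancer) is allowed in.
            "UserIdGroupPairs": [{"GroupId": lb_sg_id, "Description": "From load balancer only"}],
        }],
    }


if __name__ == "__main__":
    params = backend_ingress_params("sg-0backend", "sg-0loadbalancer", port=8080)
    print(params["IpPermissions"][0]["UserIdGroupPairs"][0]["GroupId"])  # sg-0loadbalancer
```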

Fine-Tuning for Peak Performance

Performance optimization is all about building a system that's both fast and resilient. Your load balancing strategy needs to account for sudden traffic shifts and give you the visibility to diagnose issues before your users ever notice them.

Preparing for massive traffic spikes is crucial. While AWS load balancers are built to scale automatically, you can "pre-warm" them by contacting AWS support if you're expecting a huge event, like a product launch. This ensures the capacity is ready to go ahead of time.

For continuous resilience, AWS offers features like zonal autoshift. This capability can automatically shift your workload’s traffic away from an Availability Zone when AWS identifies a potential issue, providing a powerful, automated recovery mechanism without any manual intervention.

Monitoring the right metrics is the key to maintaining performance. Amazon CloudWatch integrates directly with Elastic Load Balancing, offering a dashboard packed with vital statistics.

- HealthyHostCount / UnHealthyHostCount: These are your most direct indicators of your backend fleet's health. Setting up alarms on UnHealthyHostCount can alert you to failing instances immediately.

- TargetResponseTime: This metric tracks how long it takes for a request to leave the load balancer and get a response from a target. Spikes here often point to an application-level problem.

- HTTPCode_Target_5XX_Count: A sudden increase in 5xx server error codes is a clear signal that your backend application is struggling to process requests.

By setting up CloudWatch alarms for these key metrics, you can proactively identify and respond to performance issues. This level of observability transforms your load balancer from a simple traffic cop into an intelligent component of a high-performing, resilient, and secure application.
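As a sketch, the Python function below builds the keyword arguments for boto3's CloudWatch put_metric_alarm call, alarming when UnHealthyHostCount for a target group stays above zero for two consecutive one-minute periods. The dimension values, SNS topic ARN, alarm naming scheme, and the period and threshold choices are hypothetical; tune them to your own tolerance for noise.

```python
def unhealthy_host_alarm(lb_dimension, tg_dimension, sns_topic_arn):
    """Return kwargs for cloudwatch put_metric_alarm on an ALB target group's UnHealthyHostCount."""
    tg_name = tg_dimension.split("/")[1]  # "targetgroup/<name>/<id>" -> "<name>"
    return {
        "AlarmName": f"unhealthy-hosts-{tg_name}",
        "Namespace": "AWS/ApplicationELB",
        "MetricName": "UnHealthyHostCount",
        "Dimensions": [
            {"Name": "TargetGroup", "Value": tg_dimension},
            {"Name": "LoadBalancer", "Value": lb_dimension},
        ],
        "Statistic": "Maximum",
        "Period": 60,            # one-minute data points
        "EvaluationPeriods": 2,  # two consecutive breaching minutes trigger the alarm
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. notify an on-call SNS topic
    }


if __name__ == "__main__":
    alarm = unhealthy_host_alarm(
        "app/my-alb/50dc6c495c0c9188",           # hypothetical dimension values
        "targetgroup/my-tg/73e2d6bc24d8a067",
        "arn:aws:sns:us-east-1:123456789012:ops-alerts",
    )
    print(alarm["AlarmName"])  # unhealthy-hosts-my-tg
```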

Optimizing Your AWS Load Balancer Costs

Cloud costs can feel like a tangled mess, but getting a handle on your AWS load balancing bill doesn't have to be. It really just comes down to knowing what you're paying for and where the easy wins are for savings. If you focus on a few key areas, you can bring your monthly spend down quite a bit without touching performance or availability.

Elastic Load Balancing pricing is built on two main things. First, there's a straightforward hourly charge for every load balancer you have running. Second, you're billed for Load Balancer Capacity Units, or LCUs. Think of LCUs as a pay-as-you-go metric that scales with how hard your load balancer is working.

Understanding the Key Cost Drivers

To really manage costs, you need to know what's pushing your LCU consumption up. AWS looks at four different dimensions and bills you for whichever one you use the most: new connections, active connections, processed bytes (your bandwidth), or rule evaluations.

For an Application Load Balancer, the big factors are:

- New Connections: How many new connections are being made every second.

- Active Connections: The total number of connections open at any given time.

- Processed Bytes: The amount of data flowing through the load balancer.

- Rule Evaluations: This is the number of rules processed multiplied by your request rate, with the first 10 processed rules free. The more complex your routing rules, the higher this cost can climb.
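To see how the "whichever is highest" rule plays out, here's a Python sketch that estimates ALB LCUs for one hour of traffic. The per-LCU allowances in the code (25 new connections per second, 3,000 active connections per minute, 1 GB processed per hour for EC2 or IP targets, 1,000 rule evaluations per second, first 10 rules free) are taken from AWS's published ALB pricing at the time of writing and may change, so verify them against the pricing page. The function returns LCUs rather than dollars because the LCU-hour price varies by Region.

```python
def alb_lcus(new_conns_per_sec, active_conns_per_min, processed_gb_per_hour,
             rules_processed, requests_per_sec):
    """Estimate hourly ALB LCUs; billing uses the highest of the four dimensions."""
    dimensions = {
        "new_connections": new_conns_per_sec / 25,
        "active_connections": active_conns_per_min / 3000,
        "processed_bytes": processed_gb_per_hour / 1.0,  # 1 GB/hour per LCU (EC2/IP targets)
        # The first 10 processed rules are free; each LCU covers 1,000 evaluations per second.
        "rule_evaluations": requests_per_sec * max(rules_processed - 10, 0) / 1000,
    }
    dominant = max(dimensions, key=dimensions.get)
    return dimensions[dominant], dominant


if __name__ == "__main__":
    lcus, driver = alb_lcus(100, 9000, 2.5, rules_processed=20, requests_per_sec=500)
    print(lcus, driver)  # 5.0 rule_evaluations
```

In this made-up example, trimming the routing rules back to ten would drop the estimate to 4 LCUs, now driven by new connections instead.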

This pricing model really rewards efficiency. It's also no surprise that the cloud load balancer market is exploding: it was projected to hit USD 10.5 billion in 2025 and is now forecast to rocket past USD 50 billion by 2035. That's a huge jump, showing just how essential this piece of infrastructure has become. You can discover insights into the cloud load balancer market and see the trends for yourself.

Practical Tips for Cost Reduction

Knowing what drives costs is only half the job. Now you have to put that knowledge into action with some practical strategies to keep your bills from getting out of hand. A few small tweaks can add up to big savings.

One of the quickest wins is simply to choose the right load balancer for the job. If your app just needs basic TCP passthrough, a high-performance Network Load Balancer could be much cheaper than a feature-heavy Application Load Balancer. Always match the tool to the task.

Next, it's time to eliminate unused resources. You'd be surprised how often idle or "zombie" load balancers are left running in an account, quietly racking up charges every month. Make it a habit to regularly audit your environment and shut down anything that isn't actively serving traffic.

By consolidating services, you can reduce both management overhead and direct costs. A single Application Load Balancer can serve multiple applications or microservices using path-based or host-based routing, which is far more efficient than deploying a separate load balancer for each service.

Finally, you need to become friends with your AWS Cost and Usage Reports. These reports give you the nitty-gritty details on your LCU consumption, showing you exactly which load balancers cost the most and why. This data-first approach lets you make smart decisions and find savings you might have otherwise missed. For a deeper look at this, check out our guide on essential AWS cost management tools to reduce cloud spend.

Building a Scalable AWS Infrastructure

Think of effective load balancing as the foundation of any modern cloud architecture. It's what transforms a simple setup into a resilient, high-performing system that can actually scale. It’s not just some technical component you tack on; it's a strategic asset for building applications that can handle wild traffic swings on AWS. The real key is understanding that different kinds of traffic require different tools.

For instance, choosing between an Application Load Balancer (ALB) for smart, content-aware routing and a Network Load Balancer (NLB) for raw, high-speed performance is a critical decision. If you match the right ELB type to your specific workload, you'll hit that sweet spot of optimal performance without burning cash. This idea of matching the tool to the task is a core principle of good cloud design.

A Strategic Asset for Growth

You absolutely have to implement best practices for performance, security, and cost control right from the start. This isn't negotiable. This means using health checks to keep things reliable, integrating AWS WAF to lock down security, and regularly auditing your costs to cut out waste. Getting the architecture and oversight right is an essential part of effective cloud computing management services, making sure your infrastructure is actually helping your business grow.

Ultimately, you need to see your AWS load balancing setup as a dynamic part of your infrastructure. It's the central nervous system that provides the high availability and scalability you need for growth, ensuring a smooth experience for your users no matter how much demand spikes.

As cloud tech keeps evolving, traffic management is only going to get smarter. We're already seeing features like zonal autoshift, which automatically steers traffic away from impaired Availability Zones. This is just the beginning of a future where our infrastructure is increasingly self-healing and resilient by design.

AWS Load Balancing FAQs

Working with AWS load balancing brings up a few common questions, especially when you're first getting your sea legs. Getting these concepts down is crucial for building a solid, cost-effective architecture. Let's break down some of the most frequent queries we hear.

Application Load Balancer vs. Network Load Balancer: What's the Real Difference?

This is probably the most common question, and the answer comes down to what layer of the network they operate on. Think of it like this:

An Application Load Balancer (ALB) works at Layer 7, the application layer. This means it's smart enough to understand HTTP and HTTPS traffic. It can actually look inside the request and make routing decisions based on things like the URL path (/images vs. /api) or the hostname (blog.yoursite.com vs. shop.yoursite.com). It's all about content-aware routing.

A Network Load Balancer (NLB), on the other hand, is a Layer 4 workhorse. It deals with the transport layer, TCP and UDP traffic, and it is built for one thing: raw, blistering speed. It doesn't care about the content of the packets; its job is to forward them to a target as fast as humanly possible, making it perfect for high-throughput, low-latency workloads.

How Do Load Balancers Handle Server Failures?

This is where the magic of high availability comes in. AWS load balancers are constantly running automated health checks, pinging each server in their target group to make sure it's alive and well.

If a server suddenly stops responding to these checks, the load balancer doesn't wait around. After a configurable number of consecutive failed checks, it flags that instance as "unhealthy" and yanks it out of the rotation. All new traffic is redirected to the remaining healthy servers, so for most users the failover is seamless.

This automatic health monitoring and traffic rerouting is the secret sauce for eliminating single points of failure. It’s what keeps your application online and your users happy, even when a server goes down in the middle of the night.

Can I Use One Load Balancer for Multiple Websites?

Absolutely, and you definitely should! This is one of the most powerful and money-saving features of the Application Load Balancer (ALB).

An ALB uses something called content-based routing. You can set up simple rules that tell it where to send traffic. For example:

- Any request for www.website-one.com gets sent to the servers for that site.

- Traffic for api.website-two.com is routed to a completely different group of API servers.

By consolidating traffic for multiple domains onto a single ALB, you dramatically simplify your infrastructure, make SSL certificate management a breeze, and, most importantly, cut down on the number of load balancers you have to pay for each month.
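In API terms, each of those hostnames becomes a listener rule with a host-header condition. Here's a Python sketch that builds the keyword arguments for one boto3 elbv2 create_rule call per hostname. The listener and target group ARNs are hypothetical, and assigning priorities in alphabetical order of hostname is just an arbitrary choice for the example.

```python
def host_rule_params(listener_arn, host_to_target_group):
    """Return kwargs for one elbv2 create_rule call per hostname (host-based routing)."""
    rules = []
    # Sort for stable output; each priority must be unique on the listener.
    for priority, (host, tg_arn) in enumerate(sorted(host_to_target_group.items()), start=1):
        rules.append({
            "ListenerArn": listener_arn,
            "Priority": priority,  # lower numbers are evaluated first
            "Conditions": [{"Field": "host-header", "HostHeaderConfig": {"Values": [host]}}],
            "Actions": [{"Type": "forward", "TargetGroupArn": tg_arn}],
        })
    return rules


if __name__ == "__main__":
    for rule in host_rule_params(
        "my-listener-arn",  # hypothetical ARNs throughout
        {"www.website-one.com": "site-one-tg-arn", "api.website-two.com": "site-two-api-tg-arn"},
    ):
        print(rule["Priority"], rule["Conditions"][0]["HostHeaderConfig"]["Values"][0])
```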

Stop wasting money on idle cloud resources. CLOUD TOGGLE helps you cut AWS and Azure costs by automatically scheduling your servers to turn off when they aren't needed. Start your 30-day free trial and see how much you can save.