The fundamental choice in scaling boils down to one question: do you make one server more powerful, or do you add more servers to handle the load? Vertical scaling (scaling up) takes the first path; think of upgrading a PC with extra RAM or a faster CPU. Horizontal scaling (scaling out) takes the second; imagine hiring more people for a project rather than piling more tasks onto one person’s plate. Your decision hinges on whether you need more raw power in one machine or a wider distribution of traffic.

Core Concepts Of Horizontal And Vertical Scaling

When user demand starts to outpace your infrastructure, you face a critical fork in the road. Should you pump resources into the existing server, or spin up new instances to share the work? This choice shapes performance, cost and fault tolerance.

Scaling Up: Boosting One Machine’s Muscle

Scaling up means enhancing a single node. You replace or augment hardware:

- CPU: Add cores or bump clock speeds

- RAM: Increase memory to handle more processes

- Storage: Move to faster SSDs or larger volumes

In practice, this is often the quickest way to relieve pressure. There’s no need to re-architect your application; you just slot in more resources. That said, you’ll eventually hit physical or budget limits on how big a single box can get.
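To make the "no re-architecting" point concrete, here is a minimal Python sketch. It assumes an application that derives its pool sizes from the host's resources at startup. The function name `size_pools` and the ratios (2 workers per core, a quarter of RAM for cache) are hypothetical and chosen only for illustration. Upgrade the box, restart, and the same code picks up the extra capacity.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolConfig:
    workers: int   # request-handling threads
    cache_mb: int  # in-process cache budget


def size_pools(cpu_cores: int, ram_gb: int) -> PoolConfig:
    """Derive pool sizes from the host's resources (hypothetical ratios)."""
    # Assumption: 2 worker threads per core suits a mostly I/O-bound web app.
    workers = max(1, cpu_cores * 2)
    # Assumption: give a quarter of RAM to the in-process cache.
    cache_mb = (ram_gb * 1024) // 4
    return PoolConfig(workers=workers, cache_mb=cache_mb)


if __name__ == "__main__":
    # os.cpu_count() may return None, so fall back to a single core.
    cores = os.cpu_count() or 1
    print(size_pools(cores, ram_gb=16))
```

Moving the same code from a 4-core, 16 GB box to a 16-core, 64 GB one quadruples both values (8 to 32 workers, 4,096 to 16,384 MB of cache) without touching the application logic.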

Scaling Out: Expanding Your Server Fleet

Scaling out spreads the workload across multiple servers:

- Additional Nodes: Launch new instances

- Load Balancer: Route traffic evenly

- Shared Storage: Sync state between machines

This approach embraces distributed design. For example, e-commerce sites often spin up extra web servers when traffic spikes. The initial setup is more involved (configuration, networking and orchestration), but it pays off when you need high availability and flexible growth.
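Here is a minimal Python sketch of what the "shared storage" bullet buys you. It assumes per-user state lives in an external store. `SessionStore` is an in-memory stand-in for something like Redis or a database, and the class names are hypothetical. Neither web node keeps the cart itself, so a load balancer can send consecutive requests from the same user to different nodes.

```python
from dataclasses import dataclass


class SessionStore:
    """Stand-in for an external store such as Redis (in-memory here, hypothetical)."""

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = {}

    def get_cart(self, session_id: str) -> list[str]:
        # Return a copy so callers can't mutate stored state by accident.
        return list(self._data.get(session_id, []))

    def save_cart(self, session_id: str, cart: list[str]) -> None:
        self._data[session_id] = list(cart)


@dataclass
class WebServer:
    """A stateless web node: it keeps no per-user data between requests."""
    name: str
    store: SessionStore

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        cart = self.store.get_cart(session_id)  # load state from the shared store
        cart.append(item)
        self.store.save_cart(session_id, cart)  # persist before responding
        return cart
```

For example, `web1 = WebServer("web-1", store)` can add a book to session `"session-42"`, and a later request to `web2 = WebServer("web-2", store)` for the same session still sees it. Either node could be removed without losing the cart.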

At its core, scaling is about elasticity. An application that bends with traffic surges without breaking is the ultimate goal.

Below is a concise overview to help you spot the key distinctions at a glance.

Key Differences Between Scaling Methods

This table provides a high-level summary of the core differences between the two scaling methods, serving as a quick reference for readers.

| Attribute | Horizontal Scaling (Scale-Out) | Vertical Scaling (Scale-Up) |
|---|---|---|
| Methodology | Add more machines to the resource pool | Boost the power (CPU, RAM) of one machine |
| Primary Goal | Distribute workload across nodes | Increase the capacity of a single node |
| Complexity | Higher: requires load balancing and orchestration | Lower: upgrade one server |
| Failure Impact | Fault tolerant: one node can fail without taking the service down | Single point of failure |

That snapshot should help you decide at a glance which method fits your scenario.

Comparing Performance and Architecture Trade-Offs

When you choose between scaling horizontally and vertically, you're not just picking a technology; you're making a fundamental decision that shapes your entire system's architecture and performance. Each path comes with its own trade-offs, affecting everything from reliability and complexity to how gracefully your application handles a sudden traffic spike. Getting this choice right is crucial for building solid, efficient infrastructure.

The first big difference is fault tolerance. Horizontal scaling is built for high availability from the ground up. By spreading the workload across a fleet of independent servers, it removes any individual server as a single point of failure. If one machine goes down, health checks route traffic to the healthy ones, and users typically never notice. Service continues uninterrupted.

Vertical scaling is the polar opposite. It puts all its eggs in one basket, concentrating every bit of processing power into a single, massive server. While this gives you incredible raw power for heavy-duty tasks, it’s also a massive vulnerability. If that one machine fails, your entire application goes down with it. There’s simply no built-in backup.

Implementation Complexity and Throughput

Getting started with each model feels completely different. Vertical scaling is often the simpler route, at least in the short term. It usually just means upgrading a server's CPU, adding more RAM, or swapping in faster storage. It’s a hardware boost that requires few, if any, changes to your application code. The logic stays the same; it just runs on a more powerful machine.

Horizontal scaling, on the other hand, forces you to think about distributed systems from day one. Your applications have to be designed to be stateless or have their state managed externally. This approach also brings a few extra components into the mix that are non-negotiable for it to work.

- Load Balancing: You absolutely need a load balancer to intelligently spray incoming requests across all your servers. This prevents any single machine from getting slammed.

- Node Communication: The servers in your cluster need a way to talk to each other to sync up data and maintain a consistent state across the board.

- Orchestration: You’ll need tools to manage the lifecycle of these servers, automatically adding more when traffic surges and removing them when things quiet down.
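The load balancer's core job, plus the failover behavior described earlier, fits in a few lines of Python. This is a simplified round-robin policy under stated assumptions. Health state is set by hand through hypothetical `mark_down`/`mark_up` calls. Real balancers run periodic health probes and also offer other policies, such as least-connections.

```python
import itertools


class RoundRobinBalancer:
    """Rotate requests across nodes, skipping any that failed a health check."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self.healthy = set(nodes)
        self._cycle = itertools.cycle(self.nodes)

    def mark_down(self, node: str) -> None:
        self.healthy.discard(node)

    def mark_up(self, node: str) -> None:
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self) -> str:
        # Try each node at most once per call so we never loop forever.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy nodes available")
```

With three nodes and `web-2` marked down, four consecutive picks return `web-1`, `web-3`, `web-1`, `web-3`. The failed node drops out of rotation and rejoins when marked up again.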

The core trade-off is pretty clear: vertical scaling gives you simplicity and raw power but sacrifices resilience. Horizontal scaling delivers rock-solid availability and flexibility, but you pay for it with greater architectural complexity.

Figuring out how to manage traffic is a huge piece of the puzzle. For anyone building on a major cloud platform, getting this right is foundational. You can dive deeper into effective strategies by checking out our guide on load balancing in AWS.

Throughput and Performance Limits

When you look at pure throughput, horizontal scaling has a far higher ceiling: in principle, you can keep adding commodity servers to the pool to handle ever-growing demand. It’s the go-to model for massive web applications, microservices, and big data processing, where distributing the load is the only practical way to keep up. Given that studies show downtime can cost businesses an average of $12,900 per minute, the resilience of horizontal scaling isn't just a technical benefit; it's a critical business advantage.
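The "keep adding servers" model turns capacity planning into simple arithmetic. Here is a minimal sketch, assuming you have load-tested how many requests per second one node can sustain. The 70% utilization target and the single spare node are illustrative defaults, not recommendations from this guide, and `nodes_needed` is a hypothetical name.

```python
import math


def nodes_needed(peak_rps: float, per_node_rps: float,
                 target_utilization: float = 0.7, spare_nodes: int = 1) -> int:
    """Estimate fleet size for a peak load with headroom and N+1 redundancy."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    # Load each node only up to the target, leaving headroom for bursts.
    usable_rps = per_node_rps * target_utilization
    # Round up: a fractional node still means provisioning a whole one.
    base = math.ceil(peak_rps / usable_rps)
    # Extra node(s) so losing one machine does not overload the rest.
    return base + spare_nodes
```

For example, a 12,000 req/s peak on nodes that each sustain 1,000 req/s gives 19 nodes: 18 to carry the load at 70% utilization, plus one spare.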

Vertical scaling, however, will always hit a wall. There’s only so much CPU and RAM you can cram into a single server. Once you reach top-tier hardware, costs climb steeply while the performance gains get smaller and smaller. It quickly becomes an unsustainable strategy for any fast-growing application.

Analyzing the Financial Impact of Your Scaling Choice

Every infrastructure decision eventually circles back to one thing: cost. When you're weighing horizontal against vertical scaling, you're not just picking a technical path. You're committing to a financial strategy that will shape your budget from day one and for years to come. Getting this right is absolutely critical for sustainable growth.

On the surface, vertical scaling can look like the more budget-friendly route. Upgrading a server with more CPU or RAM feels like a straightforward, one-off purchase. But that initial simplicity can be misleading, especially as your needs grow.

The True Cost of Scaling Up

The financial model for vertical scaling is a classic case of diminishing returns. As you start chasing high-performance hardware, the price for each little boost in power skyrockets. The most powerful servers carry a massive premium, and you can easily find yourself locked into a single vendor just to get the top-tier components you need.

This approach also comes with hidden operational price tags. A single, monolithic machine is a single point of failure. Any downtime means lost revenue, and keeping that one server at 100% uptime is a constant, expensive battle.

Vertical scaling ties your costs to the premium market for high-end hardware. Horizontal scaling, in contrast, aligns your spending with the affordable and competitive market for commodity servers.

Leveraging Cloud Elasticity with Horizontal Scaling

This is where horizontal scaling really shines, particularly in a cloud setup. It aligns perfectly with the pay-as-you-go model that cloud providers have built their businesses on. Instead of sinking a huge upfront investment into one beast of a machine, you spread that cost across multiple smaller, more affordable commodity servers.

This model gives you fine-grained control over your spending. You can spin up or shut down instances in real time as demand fluctuates, which means you pay only for what you actually use. This elasticity is the cornerstone of smart financial management in the cloud. For a deeper dive, check out our guide on cloud cost optimization.

From a pure cost perspective, vertical scaling might seem cheaper at the start, but it gets increasingly expensive as demand ramps up. Horizontal scaling, on the other hand, breaks the cost into manageable pieces that can be turned on or off, saving you money during quiet periods. You can explore more of these cost dynamics in this detailed comparison of vertical vs horizontal scaling on cockroachlabs.com.
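A toy model makes the pay-as-you-go effect easy to see. All prices and capacities here are hypothetical and only illustrate the shape of the comparison. One large server costs $5.00 per hour and covers 8 units of peak demand, which includes an assumed premium for high-end hardware. Small nodes cost $0.50 per hour and cover 1 unit each.

```python
import math


def fixed_cost(hourly_price: float, hours: int) -> float:
    """One big server sized for peak runs (and bills) every hour."""
    return hourly_price * hours


def elastic_cost(demand_per_hour: list[float], node_capacity: float,
                 node_hourly: float, min_nodes: int = 1) -> float:
    """Small nodes are added or removed each hour to match demand."""
    total = 0.0
    for demand in demand_per_hour:
        # Provision just enough whole nodes for this hour's demand.
        nodes = max(min_nodes, math.ceil(demand / node_capacity))
        total += nodes * node_hourly
    return total
```

Take a day with 16 quiet hours at 2 units and 8 busy hours at 8 units. The elastic fleet costs $48.00, while the always-on large server costs $120.00. The gap depends on how spiky demand is; a flatter profile narrows it.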

Picking the Right Scaling Strategy for the Job

Knowing the difference between scaling up and scaling out is one thing, but seeing how these strategies actually perform in the wild is what really matters. The best choice almost always boils down to your application's architecture and what you need it to do. Some systems are just naturally built for one approach over the other.

Looking at these real-world examples makes it clear there's no single right answer. It’s a strategic decision shaped by your system's design, whether you're working with an old-school monolithic beast or a modern, distributed microservices setup.

When to Scale Vertically

Scaling up is still a powerful and sensible choice for certain types of applications, especially those that weren't designed to be spread out. Its simplicity makes it the obvious solution in a few key situations.

Here are a few scenarios where beefing up a single server is the most logical move:

- Stateful Applications: Think of systems that have to remember user session data, like a real-time gaming server or an e-commerce shopping cart. Spreading that kind of state across multiple machines is a complex headache. Vertical scaling sidesteps all that synchronization drama by keeping everything on one powerful machine.

- Legacy Monolithic Systems: A lot of older software was built as a single, tightly-knit unit. Trying to rewrite a monolith for horizontal scaling can be a massive, budget-draining project. In these cases, just adding more CPU and RAM to the existing server is a much faster and more cost-effective fix.

- High-Performance Databases: Some traditional relational databases, especially those churning through complex transactions or massive joins, run best when all the data is in one place to keep latency super low. Scaling up the database server gives you the raw horsepower needed for these intense jobs without the drag of network communication.

Choosing vertical scaling often comes down to managing complexity. For applications that are inherently difficult to break apart, strengthening the existing foundation is the most pragmatic path forward.

Vertical scaling really shines with legacy or monolithic applications that you can't easily distribute. On the other hand, horizontal scaling is the king of stateless, microservices-based, and containerized applications that see big swings in demand or serve users in different regions. Distributing the workload across multiple nodes gives you fault tolerance, redundancy, and the ability to roll out updates with zero downtime. For a closer look at these application types, check out this in-depth analysis of scaling strategies.

When to Scale Horizontally

Horizontal scaling is the backbone of pretty much every modern, large-scale web service. It’s designed for resilience, flexibility, and handling unpredictable traffic spikes without breaking a sweat.

You'll see this approach everywhere in architectures that were designed from the get-go to be distributed:

- Microservices Architectures: Applications built from small, independent services are a perfect match for horizontal scaling. You can scale just one part of the system, like the payment service or user login, based on its specific demand, without touching anything else. It's efficient and targeted.

- Large-Scale Stateless Web Applications: Picture massive e-commerce sites or social media feeds. These platforms handle millions of requests that don’t rely on saved session data. Being stateless means they can spin up new servers or shut them down on the fly to match traffic, keeping the user experience smooth even when things go viral.

- Big Data Processing: Frameworks like Hadoop and Spark were literally built to chop up huge data processing jobs and spread them across hundreds or even thousands of cheap servers. This is horizontal scaling in its purest form, and it's what makes analyzing petabytes of data possible.
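The split-process-combine pattern behind Hadoop and Spark can be shown at toy scale. This Python sketch is not either framework's API. Threads stand in for worker machines, and the function names are hypothetical. Each worker sees only its own partition, and a final reduce step merges the partial results. That separation is what lets the real frameworks spread one job across many servers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split(records: list[str], partitions: int) -> list[list[str]]:
    """Deal records round-robin into roughly equal partitions."""
    return [records[i::partitions] for i in range(partitions)]


def map_partition(lines: list[str]) -> Counter:
    """Map step: each worker counts words in its own partition only."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(records: list[str], workers: int = 3) -> Counter:
    parts = split(records, workers)
    # Threads stand in for separate machines; a cluster would ship partitions over the network.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_partition, parts))
    # Reduce step: merge the per-partition counts into one result.
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total
```

Adding capacity here means raising `workers`. In a real cluster, it means adding machines that each take a share of the partitions.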

How to Choose the Right Scaling Strategy for Your Needs

Picking the right scaling strategy is more than a technical checkbox; it's a core decision that dictates your application's resilience, budget, and future. To move past a simple "this vs. that" comparison, you need to get real about your system's architecture, traffic patterns, and long-term business goals. The best approach is almost always found by looking at how these factors connect.

Answering a few key questions will bring instant clarity and guide you down the most logical path. A solid assessment of your specific needs ensures you’re building an infrastructure that’s not just powerful today, but ready for whatever comes next.

Evaluating Your Application Architecture

First things first: what does your application actually look like under the hood? Is it a tightly wound monolith, or is it built from distributed, independent microservices? The answer to this one question largely sets the stage for your scaling options.

Monolithic applications, where every component depends on the others, are notoriously difficult to spread across multiple servers. Their stateful nature and tangled logic make them a perfect candidate for vertical scaling. On the other hand, stateless microservices are literally designed to be distributed, making horizontal scaling the natural, almost obvious, choice.

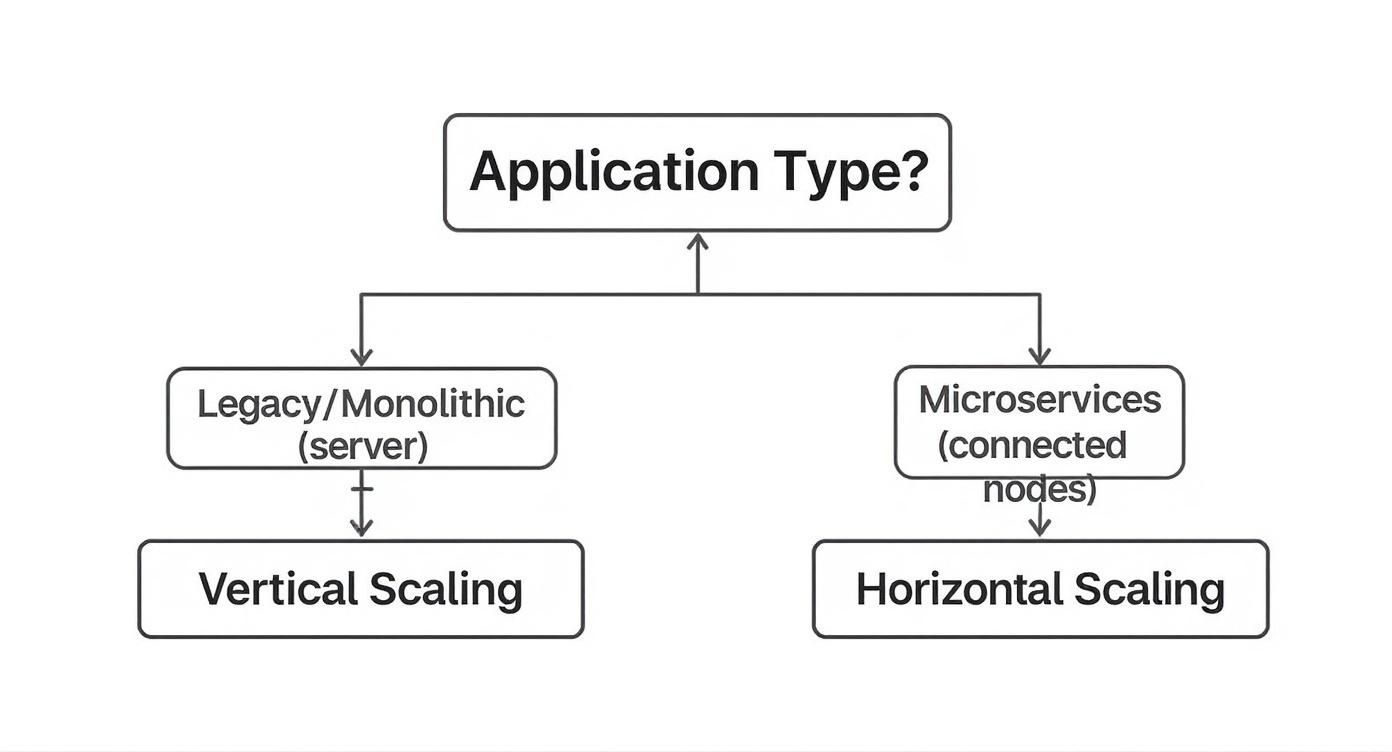

This decision tree shows how your application's design directly points to one path or the other.

The takeaway here is that your current architecture creates both constraints and opportunities for how you can grow.

Considering Traffic Patterns and Growth Projections

How does your application handle incoming demand? Do you see predictable peaks and valleys every day, or are you dealing with sudden, massive traffic spikes that come out of nowhere? Nailing this down is critical for picking a cost-effective strategy.

- Predictable Workloads: If your traffic is steady and growth is gradual, vertical scaling is a straightforward way to add more horsepower without a major re-architecture.

- Volatile Traffic: For apps with unpredictable demand, like an e-commerce site during a flash sale, horizontal scaling gives you the elasticity to add and remove servers in real time. This is how you avoid paying for capacity you aren't using.
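The architecture and traffic questions so far can be folded into a rough first-pass helper. This is a hypothetical sketch, not a reproduction of the decision tree in the figure. Mapping stateless services with steady traffic to "hybrid" is an assumption of this sketch. A monolith facing volatile traffic still lands on "vertical" here, reflecting how hard monoliths are to distribute; in practice, that combination may justify a re-architecture.

```python
from enum import Enum


class Architecture(Enum):
    MONOLITH = "monolith"
    STATELESS_SERVICES = "stateless services"


class Traffic(Enum):
    PREDICTABLE = "predictable"
    VOLATILE = "volatile"


def suggest_strategy(arch: Architecture, traffic: Traffic) -> str:
    """Rough first-pass suggestion from architecture and traffic shape (hypothetical)."""
    if arch is Architecture.MONOLITH:
        # Hard to distribute without a rewrite, so add power to the one box.
        return "vertical"
    if traffic is Traffic.VOLATILE:
        # Stateless nodes can be added and removed as demand swings.
        return "horizontal"
    # Assumption: stateless but steady, so right-size nodes, then add more as growth requires.
    return "hybrid"
```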

The ultimate goal is to match your infrastructure capacity as closely as possible to real-time demand. A strategy that allows for rapid, automated adjustments is often the most efficient.
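A common way to automate this matching is a target-tracking rule. The Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips changes when the ratio falls within a tolerance, 0.1 by default. The Python function below is a simplified re-implementation of that rule for illustration, not the HPA's code. The min/max bounds are hypothetical defaults, and real autoscalers add refinements such as stabilization windows.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Target-tracking rule in the style of the Kubernetes HPA (simplified)."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the fleet doesn't flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90% CPU against a 60% target, the rule asks for 6. At 62%, it stays at 4 because the deviation is within tolerance.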

Of course, managing this infrastructure effectively is key. For teams looking to get a better handle on this, exploring professional cloud computing management services can provide the expertise and tools needed to nail both performance and cost.

Embracing a Hybrid Approach

The debate over horizontal vs. vertical scaling isn't always an either/or fight. In many modern cloud setups, the smartest strategy is a hybrid one: vertically scale individual instances to an optimal size, then scale horizontally by adding more of those right-sized nodes to your cluster.

This "scale up, then scale out" method combines the raw per-node performance of vertical scaling with the flexibility and resilience of horizontal scaling. The result is a system that is both powerful and hard to break, a balance that often underpins long-term success.

Frequently Asked Questions

As you get deeper into system design, a few common questions about horizontal vs. vertical scaling always seem to pop up. Let's tackle them head-on to clear things up.

Can You Use Horizontal and Vertical Scaling Together?

Absolutely. In fact, most modern cloud architectures rely on a hybrid strategy. It's not an either/or decision; it's about finding the right balance.

The typical approach is to first scale vertically to find the "sweet spot" for your individual servers: the most efficient size for performance and cost. For example, your team might find that a server with 16 vCPUs and 64 GB of RAM is the ideal building block for your main workload. Once you have that unit, you scale horizontally by adding or removing copies of it as traffic demands.

This combination gives you the best of both worlds: the raw horsepower of vertical scaling and the nimble flexibility of horizontal scaling.

A hybrid approach stops you from overspending on massive, underused instances while still giving you the elasticity to handle sudden traffic surges. Think of it as finding your perfect Lego brick, then using as many as you need.
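The "perfect Lego brick" idea reduces to two small calculations. In this hypothetical Python sketch, you first benchmark a few instance sizes on your own workload. The names, prices, and throughput figures in the usage below are made up. You pick the size with the lowest cost per unit of throughput, then count how many copies the current load needs. The two-node minimum is an assumption to keep some redundancy.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    hourly_price: float
    capacity_rps: float  # throughput measured on your own workload


def best_building_block(options: list[InstanceType]) -> InstanceType:
    """Scale-up step: pick the size with the lowest price per unit of throughput."""
    return min(options, key=lambda t: t.hourly_price / t.capacity_rps)


def fleet_for(load_rps: float, block: InstanceType, min_nodes: int = 2) -> int:
    """Scale-out step: how many copies of that block the current load needs."""
    return max(min_nodes, math.ceil(load_rps / block.capacity_rps))
```

Suppose three hypothetical sizes: 4 vCPU at $0.20/h for 900 req/s, 16 vCPU at $0.80/h for 4,000 req/s, and 64 vCPU at $3.20/h for 12,000 req/s. The 16 vCPU option wins on cost per request. A 10,000 req/s load then needs 3 of them.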

Is One Scaling Method Better for Databases?

This really comes down to the kind of database you're running. There’s no single "best" answer.

Traditional relational databases, like a standalone MySQL or PostgreSQL instance, are often stateful and weren't originally designed to be spread across multiple machines. For these, vertical scaling is usually the most straightforward and practical path. You just give the single machine more power.

But the database world has changed. Modern distributed databases like Cassandra or CockroachDB, and even clustered SQL databases, were built for horizontal scaling. They are designed to spread data and queries across a fleet of nodes. This architecture delivers a level of availability and scale that a single machine cannot match, making it a much better fit for large, mission-critical applications.
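One common way such systems decide which node holds which keys is consistent hashing; Cassandra, for instance, places nodes on a token ring. The Python class below is a simplified, hypothetical sketch of the idea, not Cassandra's or CockroachDB's implementation (CockroachDB, for example, splits data into key ranges instead). Its useful property: when a node joins, only the keys that now fall to the new node move, instead of everything being reshuffled.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable across processes, unlike Python's built-in hash().
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes for spreading keys across servers."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._ring: list[tuple[int, str]] = []
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        # Each physical node owns many points on the ring to even out the load.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def owner(self, key: str) -> str:
        if not self._ring:
            raise LookupError("ring has no nodes")
        # A key belongs to the first ring point at or after its hash, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]
```

Real databases also replicate each key to several nodes. This sketch shows placement only.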

What Is Autoscaling and How Does It Relate to This?

Autoscaling is simply the automation that adjusts your computing resources in response to real-time demand. It's the engine that powers both scaling methods without needing someone to manually intervene.

- Horizontal Autoscaling: This is what most people think of when they hear "autoscaling." It automatically adds or removes instances from a pool. It’s well suited to unpredictable traffic, like a web server getting slammed after a marketing email goes out.

- Vertical Autoscaling: This automatically changes the resources (like CPU or memory) of an existing machine. It's less common for instant, on-the-fly changes because it can require a restart. However, Kubernetes uses it effectively through the Vertical Pod Autoscaler (VPA), which is well suited to "right-sizing" workloads over time so they have the resources they need without waste.
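The right-sizing idea can be sketched as "look at what the workload actually used, then set its request a bit above a high percentile." This is a simplified, hypothetical rule for illustration, not the VPA's algorithm. Its recommender is more involved and works from usage histograms collected over time. The 90th percentile and 15% margin here are assumptions, not its settings.

```python
import math


def recommend_request(usage_samples: list[float], percentile: float = 0.9,
                      safety_margin: float = 0.15) -> float:
    """Suggest a resource request from observed usage (simplified right-sizing)."""
    if not usage_samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples)
    # Nearest-rank percentile: the smallest value covering `percentile` of samples.
    rank = max(1, math.ceil(percentile * len(ordered)))
    base = ordered[rank - 1]
    # Pad the percentile so brief bursts above it don't starve the workload.
    return base * (1 + safety_margin)
```

Suppose CPU samples (in millicores) fall between 200 and 300, with one 900 spike. The recommendation lands at about 345, so the single spike doesn't inflate the request. That is the point of using a percentile rather than the maximum.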

Ready to stop wasting money on idle cloud servers? CLOUD TOGGLE makes it easy to schedule automatic shutdowns for your AWS and Azure VMs, cutting costs without sacrificing control. Start your 30-day free trial and see how much you can save at https://cloudtoggle.com.