Trying to get a handle on your Amazon S3 storage costs can feel a bit like reading a phone bill from the 90s: confusing and full of surprises. But it’s simpler than it looks. Instead of one flat fee, S3 breaks down its pricing into a few key parts, which is great because you only pay for what you actually use.

Demystifying Your Amazon S3 Bill

Think of Amazon S3 like a high-tech, pay-as-you-go storage unit for all your digital files. Your total monthly bill isn't just about how much space your files take up; it's also about how often you access them and where you send them.

Getting a clear picture of these core components is the first real step toward managing your cloud spend. Once you understand them, you can avoid those "uh-oh" moments when the bill arrives and start making smart decisions to lower your costs.

Primary Drivers of Your Amazon S3 Bill

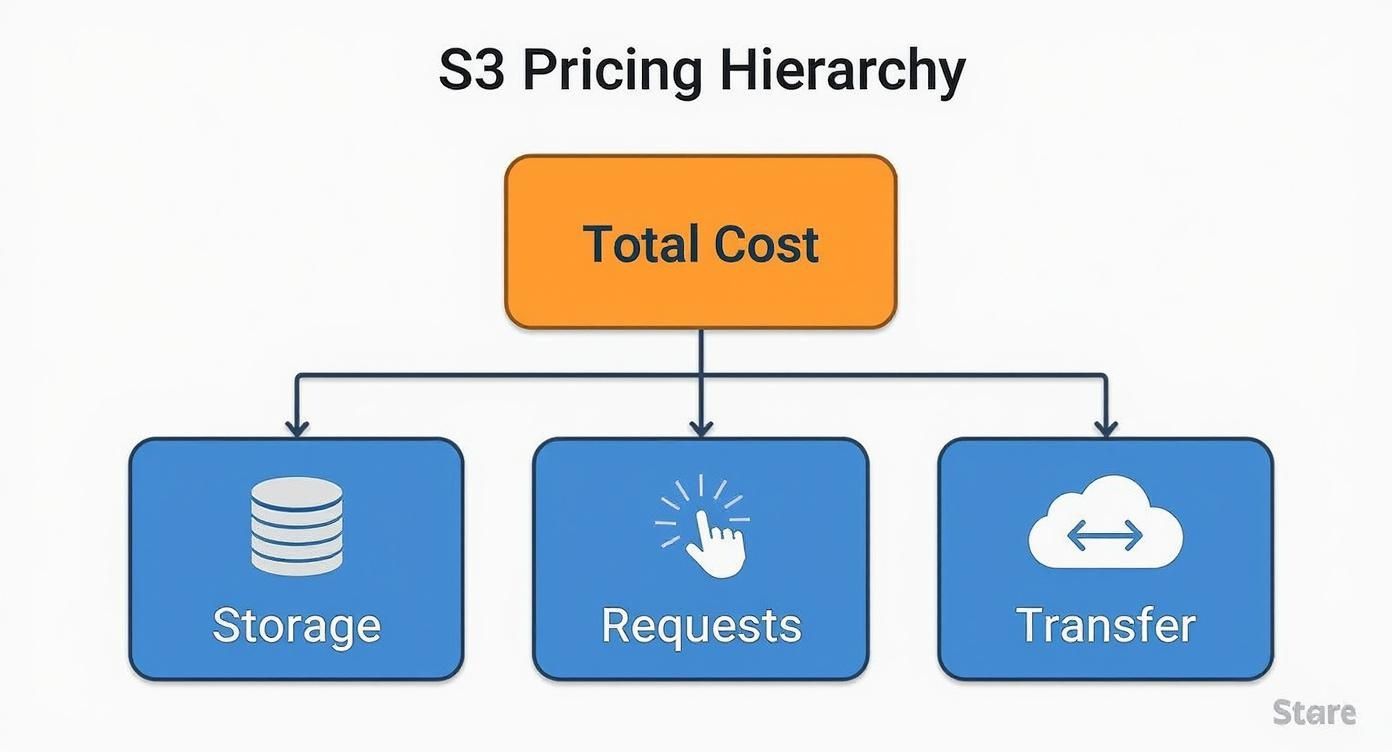

So, what are you actually paying for? Your Amazon S3 bill really boils down to three main activities. Each one contributes to your final bill in a different way, and grasping these is absolutely crucial if you want to optimize your spending.

Let's break down the main components that drive your expenses with a simple table.

Primary Drivers of Your Amazon S3 Bill

| Cost Component | What It Means | Simple Analogy |

|---|---|---|

| Storage Volume | This is the amount of space your data occupies, measured in gigabytes (GB) per month. | Think of it like renting a physical storage unit. The more boxes you store, the more you pay for the space. |

| Requests & Retrievals | Every interaction with your files, uploading, downloading, or even listing them, counts as a request. | This is like paying a small fee every time you visit your storage unit to put something in (a PUT request) or take something out (a GET request). |

| Data Transfer Out | This is the cost to move your data out of AWS data centers and onto the public internet. | It’s like paying a delivery fee to have items from your storage unit shipped to another location. Moving things in is free, but moving them out costs you. |

Each of these elements plays a part in your final bill. If you have an application that’s constantly reading and writing small files, your Requests & Retrievals costs could easily outpace your storage costs, even if the total data volume is low.

Understanding these three pillars: storage, requests, and data transfer, is the key to unlocking your S3 spending. Focusing only on storage volume is a common mistake that can lead you to overlook major savings opportunities in the other two areas.

The Three Pillars of S3 Pricing

To really get a handle on your Amazon S3 storage costs, you need to understand the three core components that show up on your bill every month. Think of them as the three legs of a stool: your total cost rests on Storage, Requests, and Data Transfer. If you ignore even one of these, you can get hit with some surprising charges. But once you see how they work together, you can start making smarter decisions to lower your bill.

Each "pillar" represents a different way you use S3. Figuring out how much you’re spending on each one is the first step toward building a cloud storage strategy that doesn't break the bank. Let’s break them down with some real-world examples to see exactly where the money goes.

Pillar 1: Storage Volume and Tiers

The most obvious cost is for the storage itself. This is what you pay for the sheer amount of data you're keeping in your S3 buckets, usually measured in gigabytes (GB) per month. You can think of it as the rent you pay for the digital shelf space your files are taking up.

But not all shelf space has the same price tag. A huge factor in your storage cost is the S3 storage class you pick. Amazon has several different tiers built for different needs, and the price per gigabyte changes dramatically from one to the next.

For example, data you need to access instantly and often, like images for your website or active project files, belongs in a higher-cost tier like S3 Standard. On the other hand, data you rarely touch, like old legal documents or long-term backups, can be moved to a much cheaper tier like S3 Glacier Deep Archive for a tiny fraction of the cost.

- S3 Standard: Costs about $0.023 per GB for your first 50 TB. This is your go-to for frequently accessed data.

- S3 Glacier Deep Archive: Can be as cheap as $0.00099 per GB. It's perfect for data you need to keep but almost never look at.

Choosing the right storage class is easily the most effective way to control this part of your bill. Paying for high-performance storage for data that just sits there is one of the most common ways businesses accidentally overspend on S3.

Pillar 2: Requests and Data Retrievals

The second pillar is all about requests and data retrievals. Every single action you or your applications perform on your objects comes with a tiny, individual cost. While the fee for one request is minuscule, they can add up to a serious number, especially if you have an application making millions of operations.

Imagine you're running a photo-sharing app. Every time a user uploads a photo, that’s a PUT request. When they browse a gallery, your app makes LIST requests to see what files are in the folder and GET requests to actually display the images.

Each of these tiny actions shows up on your bill. For an app with thousands of users constantly uploading, viewing, and deleting files, these request costs can sometimes grow to be even larger than your actual storage costs. It's a classic "gotcha" if you're not watching it.

Different types of requests have different prices, which also change depending on the storage class. For S3 Standard, uploading an object (PUT, COPY, POST) or listing a bucket’s contents (LIST) usually costs around $0.005 per 1,000 requests. Fetching data (GET) is a bit cheaper, but it’s still an action you pay for.

Pillar 3: Data Transfer Costs

Finally, we have the third pillar: data transfer. This is often the most misunderstood and surprising part of an Amazon S3 bill. You get charged whenever you move data out of an AWS region and onto the public internet.

Here’s the most important thing to remember:

- Data Transfer IN: Moving data into S3 from anywhere? Almost always free.

- Data Transfer OUT: Moving data out of S3 to the internet? That costs money.

- Internal Transfers: Moving data between S3 buckets in the same AWS region is free.

This "data egress" fee is why a website with a lot of download traffic can rack up a huge S3 bill, even if it's only storing a small amount of data. Let's say you host a 1 GB video file that gets downloaded 1,000 times. You’re not paying for 1 GB of storage; you're paying to transfer 1,000 GB of data out to the internet.

Your first 100 GB of data transfer out each month is free. After that, prices start around $0.09 per GB.

Once you understand these three pillars, you can look at your S3 bill with confidence, know exactly where your money is going, and find the best places to start optimizing.

How to Choose the Right S3 Storage Class

Picking the right storage class is the single most powerful lever you can pull to manage your Amazon S3 storage costs. Think of it like choosing the right kind of transportation for a job. You wouldn’t use a moving truck for a quick trip to the grocery store, and you definitely wouldn't use a sports car to haul furniture. Each S3 storage class is a different vehicle, built for a specific purpose at a specific price point.

The core idea here is simple: match how you access your data to the most cost-effective tier. Paying for high-performance, instant-access storage for data that you rarely touch is one of the most common and expensive mistakes people make. Once you understand the options, you can stop overpaying for performance you don’t need and start optimizing that S3 bill.

This infographic breaks down the main components that make up your total S3 costs, showing how storage, requests, and data transfer all fit together.

As you can see, your storage class choice directly impacts the largest piece of the puzzle, but it also has a ripple effect on the costs tied to requests and data transfers.

For Frequently Accessed Data

When your data needs to be available at a moment’s notice for constant use, S3 Standard is the default, and often the best, choice. It’s designed for high performance and low latency, making it perfect for dynamic websites, content distribution, mobile apps, and big data analytics workloads.

While it’s the priciest option in terms of pure per-gigabyte storage, it has zero retrieval fees. This makes it incredibly cost-effective for data that is constantly being read and written.

For Unpredictable or Changing Access Patterns

But what about data where access patterns are all over the place or just plain unknown? This is exactly where S3 Intelligent-Tiering shines. This class acts like a smart assistant, automatically monitoring your data's usage and shuffling objects between different access tiers without you lifting a finger.

For instance, an object that hasn't been touched for 30 consecutive days will automatically move to an infrequent access tier, immediately lowering your storage cost. If that same object is needed later, Intelligent-Tiering zips it right back to the frequent access tier. This automation saves you from paying high rates for "cold" data while ensuring performance is there when you need it.

For Less Frequent Access

For data you don't access often but still need to retrieve quickly when you do, S3 Standard-Infrequent Access (S3 Standard-IA) is a fantastic fit. It offers the same high durability and throughput as S3 Standard but comes with a much lower per-GB storage price.

The trade-off? You pay a small per-GB fee every time you retrieve the data. This makes it perfect for long-term storage, backups, and disaster recovery files that you only need in specific situations. A similar option, S3 One Zone-IA, offers an even lower price by storing data in a single Availability Zone instead of multiple, making it a good choice for less critical, easily reproducible data.

If you're exploring other cloud storage options, you might also be interested in our deep dive into understanding Azure Blob Storage pricing.

For Long-Term Archiving

The S3 Glacier family is built for one thing: archiving massive amounts of data at an extremely low cost. These are your deep-storage solutions for data you have to keep for compliance, regulatory, or historical reasons but almost never expect to access.

There are three main flavors in the Glacier family:

- S3 Glacier Instant Retrieval: Offers the lowest-cost storage with millisecond retrieval, making it ideal for archives that might need immediate access once or twice a quarter.

- S3 Glacier Flexible Retrieval: A slightly cheaper option for archives where retrieval times of a few minutes to several hours are perfectly acceptable.

- S3 Glacier Deep Archive: This is the absolute cheapest storage available from AWS. With retrieval times of 12 hours or more, it’s designed for data that might not be touched for years on end.

By aligning your data's lifecycle with these storage classes, you can achieve significant savings. A common strategy is to use S3 Lifecycle Policies to automatically transition data from S3 Standard to S3 Standard-IA after 30 days, and then to S3 Glacier Deep Archive after a year.

To make this easier to visualize, here's a quick comparison of the most common storage classes.

S3 Storage Class Comparison

| Storage Class | Ideal Use Case | Typical Retrieval Time | Relative Cost |

|---|---|---|---|

| S3 Standard | Dynamic websites, content distribution, mobile apps, analytics | Milliseconds | Highest |

| S3 Intelligent-Tiering | Data with unknown, changing, or unpredictable access patterns | Milliseconds | Variable |

| S3 Standard-IA | Long-term storage, backups, disaster recovery files | Milliseconds | Lower |

| S3 One Zone-IA | Recreatable data, secondary backup copies | Milliseconds | Lower |

| S3 Glacier Instant | Archives needing immediate access (e.g., medical images, news media) | Milliseconds | Very Low |

| S3 Glacier Flexible | Archives where a retrieval time of minutes to hours is acceptable | Minutes to Hours | Very Low |

| S3 Glacier Deep Archive | Long-term data retention for compliance (7-10 years) that is rarely accessed | 12+ Hours | Lowest |

Choosing the right class comes down to understanding your data's access patterns and balancing storage costs against retrieval needs.

Amazon S3 has a storage class for pretty much any scenario. For instance, S3 Standard is priced at about $0.023 per GB for the first 50 TB/month in a major region like US-West (Oregon), perfect for active data. Meanwhile, the S3 Intelligent-Tiering class can deliver up to 68% lower storage costs for objects that go untouched for 90 days. You can read more about these powerful pricing structures and their global availability directly from AWS.

It's one thing to talk about storage classes in theory, but seeing them in action is what really drives home the massive financial impact of smart S3 management. To make this tangible, let's look at a fantastic, real-world case study from the online design platform, Canva.

Canva’s story is a practical blueprint for turning data analysis into multi-million dollar savings on Amazon S3.

It all started with a deep dive into their massive data footprint. They didn't just need to know what they were storing, but how that data was actually being used. This analysis led them to a huge discovery about their data access patterns.

Finding the 80/20 Rule in Their Data

Canva's investigation revealed a classic pattern. They found that a tiny fraction of their total data was driving the overwhelming majority of access requests.

Specifically, only about 10% of their stored data was in the high-performance S3 Standard class. But this small slice of data accounted for a whopping 60-70% of all access activity.

The rest of their data, the vast majority, was sitting in the Standard-Infrequent Access (Standard-IA) tier, basically collecting dust. This single insight was the key to unlocking major cost reductions.

This kind of split is incredibly common. Most businesses have a small, active dataset for daily operations, while the massive remainder is just older files, backups, and historical records that are rarely touched. Figuring out that split is the first step toward optimization.

Making the Strategic Jump to Glacier

Armed with this knowledge, Canva's team made a brilliant move. They saw huge volumes of data in the Standard-IA tier that were rarely, if ever, accessed but still needed to be retrieved quickly on the off chance they were needed.

Paying Standard-IA prices for that data was just burning money.

Their solution? They moved a huge chunk of this data to S3 Glacier Instant Retrieval. This storage class was the perfect fit, giving them the cheap archival pricing of the Glacier family but with the millisecond retrieval times their apps demanded.

This one strategic shift allowed Canva to slash its storage bill. By perfectly matching their data's access patterns to the right storage tier, the company saved millions of dollars every year.

You can get the full story on how Canva optimized its S3 usage for massive savings directly from the source. Their success is proof that truly understanding your own data is the most powerful tool you have for taming your Amazon S3 bill.

Actionable Strategies to Reduce Your S3 Bill

Alright, now that you know how the S3 meter runs, let's talk about how to slow it down. Just understanding the bill is one thing; taking control of it requires a deliberate game plan. Luckily, AWS gives you some powerful levers to pull, and you can start using them today to see a real difference.

We're talking about everything from simple digital spring cleaning to smart automation. By getting proactive, you can stop reacting to your S3 bill and start treating it like a finely tuned part of your operation.

Let's dive into the most effective ways to make that happen.

Automate Your Savings with S3 Lifecycle Policies

One of the best tools in your cost-cutting arsenal is S3 Lifecycle Policies. Think of them as an automated cleaning crew for your data. You just set up a few simple rules for your S3 buckets, and they automatically move or delete your files as they get older.

For example, you could create a rule that shifts objects from the more expensive S3 Standard class to S3 Standard-IA after 30 days of not being touched. After another 90 days, another rule could kick in, moving that same data to S3 Glacier Instant Retrieval for cheap, long-term archiving. You can even set a final rule to permanently delete data after a certain time, perfect for temporary log files that are useless after a few months.

Setting up a lifecycle policy is a classic "set it and forget it" strategy. You automate the flow of data into cheaper storage tiers, guaranteeing you're never overpaying for data nobody is accessing anymore.

Get a Clear View with AWS Cost Management Tools

You can't optimize what you can't see. AWS knows this, so they give you a couple of fantastic tools to get a crystal-clear view of where every penny is going. The two you absolutely need to know are AWS Cost Explorer and S3 Storage Lens.

- AWS Cost Explorer: This is your go-to for charts and reports that break down your spending over time. You can slice and dice your S3 costs by bucket, region, or even by tags to hunt down exactly which resources are costing you the most.

- S3 Storage Lens: This tool gives you a 30,000-foot view of all your object storage across your entire organization. It doesn't just show you data; it gives you solid recommendations, like pointing out buckets that are missing lifecycle policies or flagging incomplete multipart uploads that are quietly costing you money.

Making a habit of checking these tools will help you spot trends, catch weird spikes in spending, and find specific opportunities to save. Getting a handle on your spending is a cornerstone of any good cloud strategy, and these same principles apply beyond S3. You can learn more about general strategies for cloud cost optimisation to round out your knowledge.

Perform Some Essential S3 Housekeeping

Beyond the fancy automation and dashboards, a bit of old-fashioned housekeeping can make a huge dent in your bill. These are the easy-to-miss sources of waste that can silently add up month after month.

One of the biggest offenders is incomplete multipart uploads. When you upload a large file and the process gets interrupted, those partial chunks of data can just sit there in your bucket, taking up space and costing you money for absolutely nothing. Finding and deleting these fragments is a quick and easy win.

Another simple trick is to compress files before you upload them. For things like text files, logs, or JSON data, running them through a format like GZIP or BZIP2 can shrink their size by up to 90%. That translates directly to a 90% savings on storing that file. It's a small, proactive step that pays off forever.

Why S3 Pricing Has Remained So Stable and Predictable

When you're committing huge datasets to a cloud provider, the last thing you want are surprise price hikes. It’s a legitimate fear. But understanding the history of Amazon S3’s pricing should put your mind at ease and give you the confidence you need for long-term planning.

S3’s track record shows a consistent focus on driving costs down, not gouging customers. This isn't just a happy accident; it’s baked into how the service evolved from a simple flat-rate model to the tiered system we use today.

The Evolution from Flat Rates to Tiered Savings

When S3 first hit the scene back in 2006, its pricing was dead simple: a basic flat-rate model. But as the platform exploded in popularity, AWS saw an opportunity. They introduced tiered pricing to reward their biggest users and encourage even larger-scale adoption.

This history reveals a clear pattern: as AWS benefits from massive economies of scale, it passes those savings directly on to its customers. The trend has consistently been toward price reductions, not increases.

By 2009, we saw major price cuts for petabyte-scale storage. Fast forward to December 1, 2016, and S3 simplified its model again, with pay-as-you-go tiers dropping to between $0.023 and $0.021 per gigabyte. This evolution has made Amazon S3 storage costs incredibly predictable for businesses all over the world. If you want to dive deeper, you can explore the historical timeline and pricing adjustments of Amazon S3 to see the full progression for yourself.

The real takeaway from S3's pricing history is its trajectory. The platform has consistently become more affordable over time, showing a clear commitment to long-term value and cost predictability for its users.

Building Confidence for Long-Term Planning

This isn't just a fun history lesson; it's a critical factor for your business strategy. Knowing that AWS has a long-standing habit of cutting costs rather than raising them lets you plan for the future with a much higher degree of certainty.

For any business storing critical, large-scale data, that track record is everything. It assures you that S3 is not just a technically sound platform but also a financially stable one. This predictability means you can build your entire data strategy on S3 without constantly worrying about your budget getting blown up down the line. It's a reliable foundation you can actually grow on.

Frequently Asked Questions About S3 Costs

Digging into the details of Amazon S3 storage costs always brings up some specific questions. Getting clear, simple answers is the key to managing your cloud budget and dodging those surprise charges on your monthly bill.

Let's walk through some of the most common things people ask about S3 pricing so you can plan your storage strategy with confidence.

How Can I Estimate My Monthly S3 Bill?

The absolute best way to get a handle on your future S3 expenses is to use the official AWS Pricing Calculator. This isn't just some rough estimator; it's a detailed tool that lets you plug in your expected usage for all the things that actually drive your bill, like how much you'll store, how much data you'll transfer, and how many requests you think you'll make.

You can break it down by the specific S3 storage classes you plan to use, and it will spit out a surprisingly accurate monthly estimate. It’s a must-use tool for budgeting and for comparing different architectural choices before you're locked in.

What Are the Hidden Costs of S3?

While AWS isn't trying to "hide" anything, some charges can definitely catch you off guard if you're only looking at the per-gigabyte storage price. The usual suspects for unexpected costs are:

- Data Transfer Out: This is the big one. Moving data out of S3 to the internet is a major cost driver that a lot of people underestimate. Every time a user downloads a file, a webpage loads an image, or an API call pulls data from your bucket, it adds up.

- Requests: Those small

GET,PUT, andLISToperations seem harmless, but they each have a tiny fee. If you have an application making millions of these calls, those tiny fees can snowball into a significant line item on your bill. - Management Features: Using some of the more advanced tools also comes with its own price tag. Things like S3 Storage Lens for deep analytics or setting up automated lifecycle transitions have their own charges.

Getting visibility into all these moving parts is exactly what a good FinOps practice is for. If you're new to the concept, our guide on what FinOps is and how it helps control cloud costs is a great place to start.

What Is the Cheapest Way to Store Data in S3?

For long-term archival, data you need to keep but almost never touch, S3 Glacier Deep Archive is the hands-down cheapest option you'll find. The storage price is incredibly low, often coming in at less than a dollar per terabyte per month.

The catch? Retrieval time. Getting your data back from Glacier Deep Archive can take up to 12 hours. It's perfect for compliance backups or historical records, but it's definitely not for data you need for day-to-day operations.

Does Deleting Objects from S3 Cost Money?

Nope, the act of deleting an object itself is free. AWS doesn't charge you for DELETE requests.

But there's a crucial exception you need to know about. Certain storage classes, like S3 Standard-IA and the Glacier tiers, have a minimum storage duration, often 30 or 90 days. If you delete an object before that minimum time is up, you'll get hit with an early deletion fee, which is basically the cost of storing it for the remaining days.

Ready to stop paying for idle cloud resources? CLOUD TOGGLE provides a simple, powerful way to automate server shutdowns on AWS and Azure, cutting your cloud bill without complex configurations. Start your free 30-day trial and see how much you can save at https://cloudtoggle.com.