The cost of Amazon S3 isn't a simple, flat fee. It’s a flexible model that bills you based on exactly what you use. While you might hear that storing 1 TB of data in the S3 Standard tier costs around $23 per month, that figure only tells part of the story. It covers storage and nothing else, leaving out other critical charges like data transfer and requests.

Decoding Your First Amazon S3 Bill

Cracking open your first Amazon S3 bill can feel like trying to decipher a secret code. The final number you see is a blend of several different charges, not just a simple calculation of gigabytes stored. This multi-faceted approach is incredibly powerful because it means you only pay for what you actually use, but it can easily lead to confusion if you aren't familiar with the components.

Think of it like your home utility bill. You don't just pay a single fee for "power"; you're charged for different things that add up to the total:

- The amount of electricity you actually consume (this is like your stored data).

- A small fee each time you flip a light switch (these are the requests to access your data).

- An extra charge if you send that power to your neighbor's house (this is transferring data out of S3).

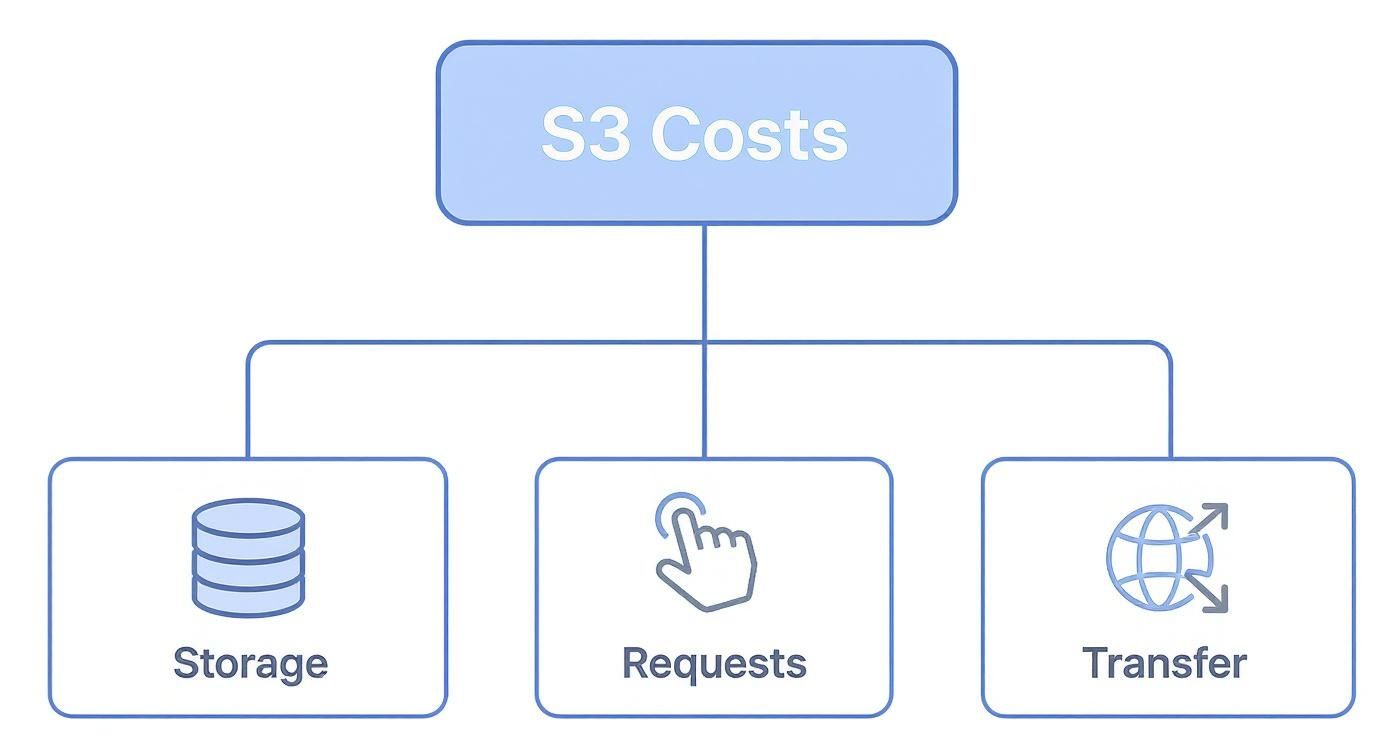

The Primary Cost Components

Each of these pieces has its own price tag, and how you interact with S3 directly shapes your monthly bill. For instance, a tiny file that gets accessed millions of times could end up costing more than a massive file that just sits there untouched. This dynamic is why understanding the breakdown is so critical for keeping costs under control.

This guide will pull back the curtain on each part of your bill, giving you the clarity you need to manage your cloud spending with confidence. Misunderstanding these components is one of the most common reasons for budget blowouts. For more on that, check out our detailed article on how to prevent AWS unexpected charges.

At its core, Amazon S3 pricing is built on a pay-as-you-go model. This means there are no minimum fees or setup charges. You simply pay for the storage you use, the requests you make, and the data you transfer.

To kick things off, let's get a high-level look at the main factors that will show up on your invoice. Getting comfortable with these categories is the first step toward mastering your S3 costs.

Primary Amazon S3 Cost Components at a Glance

Here’s a quick summary of the big-ticket items that contribute to your monthly bill.

| Cost Component | What It Covers | Typical Pricing Model |

|---|---|---|

| Storage | The physical space your data occupies, measured in gigabytes (GB). | Per GB per month |

| Requests & Retrievals | Actions performed on your data, like uploading (PUT) or downloading (GET). | Per 1,000 requests |

| Data Transfer | Moving data out of S3 to the internet or another AWS region. | Per GB transferred |

| Management & Analytics | Optional features like S3 Storage Lens or Inventory for monitoring. | Varies by feature |

Each of these plays a role, and in the next sections, we’ll dive deep into how each one works and what you can do to optimize it.

The Six Core Pillars of S3 Pricing

To really get a handle on your Amazon S3 bill, you have to look past the simple per-gigabyte storage price. Your final invoice is more like a restaurant bill. It’s not just the food, but the drinks, service fees, and extras that make up the total. S3 pricing works the same way, and we can break it down into six core pillars.

Getting familiar with these pillars is the first step to figuring out where your money is actually going. Each one plays a unique role, and small charges in one area can snowball surprisingly fast.

1. Storage

This is the most obvious one. Storage cost is the rent you pay for the space your data takes up on Amazon's servers. It’s calculated based on how many gigabytes (GB) you’re storing and for how long.

Think of S3 storage classes like different kinds of self-storage units. For stuff you use all the time, you'd pick a climate-controlled, easy-access unit that costs a bit more. For old family photos you rarely look at, a cheaper, deep-archive box in a less convenient spot makes more sense. S3 gives you that same range, from the instantly available S3 Standard all the way to the incredibly cheap S3 Glacier Deep Archive.

2. Requests and Data Retrievals

This pillar covers every action you take on your data. Every time you upload, copy, list, or delete a file, you're making a "request." AWS charges a tiny fee for these operations, usually billed per 1,000 requests. It’s like paying a small toll each time you use the storage highway.

- PUT, COPY, POST, LIST requests: These are actions that add or organize data. Uploading a new file is a classic PUT request.

- GET, SELECT, and all other requests: These involve reading or pulling your data. Downloading a file is a GET request.

For the archival storage classes like the Glacier tiers, there's also a specific charge for data retrievals. This is a separate fee you pay just to bring your data out of the deep freeze, on top of the normal request fee.

3. Data Transfer

Data transfer costs are often the biggest surprise on an S3 bill. These fees kick in when you move data out of an S3 bucket. The golden rule here is that transferring data into S3 from the internet is almost always free. The meter starts running when you move it out.

The most common culprit for a high S3 bill is Data Transfer OUT to the Internet. If you host a popular website with downloadable PDFs or images stored in S3, every single download by a user adds to this cost.

You'll also see charges for moving data between different AWS regions, for instance, copying files from a bucket in the U.S. to one in Europe. On the bright side, transferring data within the same AWS region is usually free.

4. Management and Replication

S3 isn't just a simple hard drive in the cloud; it has powerful features to manage and protect your data, and some of these have their own price tags. They’re optional, but for many businesses, they’re non-negotiable.

Here are a few key features in this category:

- S3 Replication: This feature is a lifesaver for disaster recovery. It automatically copies your data to another S3 bucket in the same or a different region. You pay for the storage in the second bucket, plus any cross-region data transfer fees.

- S3 Inventory, S3 Storage Class Analysis, and S3 Object Lock: These are tools that help you audit your storage, analyze usage patterns, and secure your data. They come with their own pricing models, often based on how many objects you’re tracking.

5. Analytics

As your data piles up, you'll want to understand what's in there without downloading everything. The analytics pillar covers S3 tools that help you analyze usage and trim costs.

The main service here is S3 Storage Lens, which gives you a high-level dashboard view of your storage activity across your entire organization. It has a great free tier, but the advanced metrics are paid. Another handy feature, S3 Select, lets you run SQL-like queries directly on your files, and you're billed based on how much data the query scans.

6. Processing

The final pillar covers services that transform your data right within S3, saving you the hassle of moving it to a separate server for processing.

A great example is S3 Object Lambda. This lets you attach your own code to S3 GET requests to modify data on the fly as it’s being retrieved. You could use it to automatically resize images for a mobile app or redact sensitive information from a document before a user downloads it. You pay for the compute time and the data processed by the Lambda function.

The sticker price for storage is just the beginning. For example, while S3 Standard in the US East region costs $0.023 per GB for your first 50 TB, your actual bill is shaped by how you use that data. GET, PUT, and DELETE requests each have per-request fees, and outbound data transfer is billed on top of that. Archival tiers like S3 Glacier Instant Retrieval slash storage costs but charge a premium for data retrievals.

This trade-off is exactly what companies like Canva have mastered, cutting their annual S3 costs by over $3 million simply by moving 90% of their data to lower-cost tiers. You can get a much deeper look at how S3 storage classes really impact your bill on nops.io.

Choosing the Right S3 Storage Class to Save Money

Picking the right storage class in Amazon S3 isn't just a technical choice; it's one of the most powerful levers you have for controlling your AWS bill. Think of S3 storage classes like different rooms in a massive warehouse. Some are premium, front-of-house spots for items you need instantly. Others are deep, secure vaults for things you rarely touch.

Paying for a premium spot to store old paperwork you'll never need is just bad business. The same logic applies here. Your goal is to match your data's access patterns to the most cost-effective storage class. This one decision impacts both your monthly storage fees and the cost to retrieve your data, so it’s a critical step.

This infographic breaks down the core pieces that make up your S3 bill.

As you can see, your total S3 spend is a mix of storage, requests, and data transfer fees. This is exactly why your storage class selection has such a big impact on the final number.

To make this easier, let's group the classes by how you'll likely use them.

For Frequently Accessed Data

When your data needs to be available at a moment’s notice for websites, applications, or real-time analytics, you have two main options built for speed and availability.

- S3 Standard: This is your default, do-it-all workhorse. It offers incredible durability, high availability, and low-latency performance. It's the perfect home for dynamic websites, mobile apps, and big data workloads where data is hit frequently and needs to be served up instantly.

- S3 Intelligent-Tiering: Think of this class as a smart assistant for your storage. It automatically shuffles your data between a frequent-access tier and a cheaper infrequent-access tier based on actual usage. If you have data with unpredictable or changing access patterns, this is a fantastic way to capture savings without lifting a finger. Just be aware there's a small monthly monitoring fee per object.

For Infrequently Accessed Data

What about data you need to keep but don't touch very often? This is where the infrequent access (IA) storage classes shine. They offer a much lower storage cost in exchange for a small fee when you do need to retrieve the data.

- S3 Standard-IA (Infrequent Access): This is the go-to for long-term storage, backups, and disaster recovery files that you don't access regularly but need back quickly when you do. It has the same high durability as S3 Standard but with a friendlier per-gigabyte price.

- S3 One Zone-IA: This option is even cheaper than Standard-IA, but there's a catch: it stores data in a single AWS Availability Zone. This makes it a great choice for data you can easily reproduce or for secondary backup copies where you're willing to trade multi-zone resilience for a 20% cost reduction.

A common mistake is just leaving everything in S3 Standard. By simply analyzing which data gets accessed less, you can shift it to an IA class and immediately cut your storage costs without hurting performance for the data that actually needs it.

For Archival and Long-Term Retention

For data you're required to keep for compliance, legal, or regulatory reasons but will almost never access, the S3 Glacier tiers offer the lowest storage costs you can find.

- S3 Glacier Instant Retrieval: Designed for archive data that still needs immediate access. This class gives you retrieval in milliseconds, just like S3 Standard-IA, but with an even lower storage price tag.

- S3 Glacier Flexible Retrieval: This is a fantastic low-cost option for archives where retrieval times of a few minutes to several hours are perfectly fine. You can even pay more for expedited retrievals if you're in a pinch.

- S3 Glacier Deep Archive: Welcome to Amazon's cheapest storage. It's built for preserving data for 7-10 years or more. Retrieving data can take up to 12 hours, making it the perfect fit for compliance archives you hope you never have to touch again.

To help you visualize the trade-offs, here’s a quick comparison of the main storage classes.

Amazon S3 Storage Class Comparison

| Storage Class | Ideal Use Case | Monthly Storage Cost (per GB) | Data Retrieval Cost | Key Feature |

|---|---|---|---|---|

| S3 Standard | Dynamic websites, mobile apps, active analytics | Highest | Low | High performance, low latency, default choice |

| S3 Intelligent-Tiering | Data with unknown or changing access patterns | Varies (Automatic) | Low | Automatic cost savings by moving data between tiers |

| S3 Standard-IA | Long-term backups, disaster recovery | Low | Moderate | Lower cost for infrequently accessed data |

| S3 One Zone-IA | Secondary backups, reproducible data | Lower | Moderate | 20% cheaper than Standard-IA, single AZ storage |

| S3 Glacier Instant | Archives needing millisecond access | Very Low | Moderate | Instant retrieval for archived data |

| S3 Glacier Flexible | Archives with flexible retrieval times (minutes/hours) | Very Low | Varies (by speed) | Configurable retrieval times, balances cost & speed |

| S3 Glacier Deep Archive | Long-term compliance archives (7-10+ years) | Lowest | High (slow) | Lowest-cost storage, up to 12-hour retrieval time |

Choosing the right class comes down to understanding your data's lifecycle. By aligning your storage choice with how often you actually need to access your files, you can dramatically reduce your S3 spending without compromising on performance or availability where it counts.

Practical Strategies to Optimize Your S3 Bill

Knowing how S3 pricing works is half the battle. Now it's time to actually take control of your cost of Amazon S3 and cut that monthly bill down to size.

The good news? AWS gives you some incredibly powerful, and often automated, tools to make it happen. These aren't about cutting corners; they're about working smarter with S3. Moving from a passive "store everything and forget it" approach to an active "store everything intelligently" mindset can unlock huge savings.

Use S3 Lifecycle Policies for Automatic Savings

One of the most effective set-it-and-forget-it strategies is using S3 Lifecycle policies. Think of these as simple rules you create to automatically shuffle your data to cheaper storage as it gets older.

For example, you can set a policy to move data from the main S3 Standard tier to S3 Standard-IA after 30 days. Then, after 180 days, you can have it archived to S3 Glacier Deep Archive. It's the perfect solution for data that's no longer needed instantly but has to be kept for compliance or historical records. You define the timeline, and S3 handles the rest.

Embrace S3 Intelligent-Tiering for Unpredictable Data

What about data where you have no idea how often it will be accessed? For those workloads with unpredictable or shifting access patterns, S3 Intelligent-Tiering is a lifesaver.

This storage class acts like a smart assistant, automatically moving your files between frequent and infrequent access tiers based on real usage. You get the cost savings without any manual work or performance hits. There’s a small monthly monitoring fee per object, but for large, dynamic datasets, the savings almost always make it a clear win.

S3 pricing has evolved significantly from its early flat-rate model. AWS’s strategy of frequent, granular price cuts driven by economies of scale and new features like Intelligent-Tiering has made S3 a cornerstone of modern cloud infrastructure. Today, S3 Standard storage costs are among the industry's lowest, enabling businesses of all sizes to scale without worrying about prohibitive expenses. Discover more about the evolution of AWS pricing on hidekazu-konishi.com.

Gain Visibility with S3 Storage Lens

You can't optimize what you can't see. S3 Storage Lens is your command center, giving you a complete, organization-wide view of your object storage. Its interactive dashboard serves up insights that help you spot cost-saving opportunities and tighten up data protection.

Use it to catch trends like a growing number of incomplete multipart uploads or to pinpoint which buckets are perfect candidates for a new lifecycle policy. For more great tools to keep your cloud spend in check, check out our guide on the top 6 AWS cost management tools.

Implement Other Essential Cost-Saving Habits

Beyond the big automation tools, a few simple habits can make a real dent in your S3 bill.

- Clean Up Incomplete Multipart Uploads: When a large file upload fails, it can leave orphaned parts behind that you’re still paying for. Set up a lifecycle policy to automatically delete these fragments and stop paying for useless data.

- Compress Files Before Uploading: Smaller files cost less to store. It’s that simple. Compressing data with formats like Gzip or Bzip2 before it even hits S3 can shrink your storage footprint and your bill.

- Minimize Cross-Region Data Transfers: Data transfer fees are a notorious hidden cost. Whenever you can, keep your S3 buckets and the EC2 instances that access them in the same AWS region to get those transfers for free.

How S3 Pricing Evolved and What It Means for You

To really get a handle on S3 costs today, it helps to rewind the clock. Back when Amazon S3 first hit the scene in 2006, pricing was dead simple: a single, flat rate. But as cloud computing took off and data needs got a whole lot more complicated, the pricing model had to keep up.

This evolution wasn't about making things confusing just for the sake of it. It was a direct reflection of what businesses actually needed. As AWS grew to a massive scale, they consistently passed those savings on to customers. This led to the sophisticated, multi-layered system we see today, built to handle pretty much any workload you can throw at it.

From Flat Rates to Tiered Savings

In the early days, you paid one price for storage, period. But as S3’s usage exploded, it became obvious that not all data is the same. Some files are mission-critical and accessed constantly, while others are just gathering dust in an archive for compliance reasons.

This insight sparked the creation of different storage classes and pricing tiers. It was a game-changer, allowing companies to pay far less for data that didn't need to be at their fingertips every second. The introduction of features like S3 Intelligent-Tiering was another major milestone, giving users a hands-off way to optimize costs by letting AWS automatically shuffle data to the cheapest tier based on how it's used.

A History of Falling Prices

One of the most remarkable things about S3 is its price history. Since launching in March 2006, Amazon has never once raised its base storage prices. In fact, AWS has announced more than 60 public price cuts across its services, and S3 has often been front and center.

This constant deflation means that while the model seems complex, it’s really built on a foundation of delivering more value over time. For example, a huge price drop in 2009 made petabyte-scale storage dramatically cheaper. Today’s Standard storage rates, hovering around $0.021 to $0.023 per GB, are a tiny fraction of what they used to be. For a closer look at this trend, you can find a great analysis of AWS pricing over time.

Understanding this history should give you confidence. It shows that even though the number of options has grown, the core idea is the same: you get more control and more value, not less. The complexity is there to give you more ways to save.

What This Evolution Means for You Today

This trip down memory lane matters because it explains why the system looks the way it does. It wasn’t engineered to be a confusing maze; it was built to offer incredible flexibility and savings at a global scale.

Here's the takeaway:

- You Have More Control: The different storage classes put you in the driver's seat. You can perfectly match your costs to how you access your data.

- Automation Drives Savings: Tools like S3 Intelligent-Tiering and Lifecycle policies are the direct result of this evolution. They exist to help you save money without having to constantly manage things by hand.

- Costs Are Predictably Low: The consistent history of price reductions means you can build on S3 knowing the foundation is stable and costs are more likely to go down than up.

Ultimately, mastering S3 costs isn't about fighting a complicated system. It's about taking advantage of a mature, powerful platform designed from the ground up to give you more bang for your buck as technology improves.

Your Path to S3 Cost Mastery

As we've seen, getting a handle on your Amazon S3 costs means looking well beyond a simple per-gigabyte price tag. If you want to achieve real cost control, you have to think about your entire cloud storage strategy, not just one number on a price sheet.

We’ve walked through the big three pricing pillars: storage, requests, and data transfer. We've also highlighted just how critical it is to match your data's workload to the right storage class. Armed with the strategies we covered, you can start proactively cutting down your bill instead of just reacting to it at the end of the month.

The key takeaway is this: Mastering your S3 costs isn’t a one-and-done fix. It's an ongoing cycle of monitoring your usage, making smart adjustments, and constantly looking for ways to optimize.

Your Next Steps to Savings

The journey to cost mastery is continuous, but you now have a solid roadmap to follow. The next steps are surprisingly straightforward and completely within your control.

- Review Your Usage: Take what you've learned here and plug your numbers into the official AWS Pricing Calculator. This will give you a much more realistic picture of your expected spending.

- Implement Lifecycle Policies: This is one of the most powerful "set it and forget it" optimizations out there. Automate the process of shifting data to cheaper storage tiers as it ages.

- Leverage AWS Tools: Make S3 Storage Lens a regular part of your workflow. It gives you incredible visibility into how you're using storage and will help you spot new savings opportunities you might have missed.

A FinOps Mindset for S3

Ultimately, managing S3 costs effectively is a huge piece of a much bigger puzzle: your overall cloud financial strategy. This approach, often called FinOps, is all about bringing financial accountability to the flexible, pay-as-you-go nature of the cloud. If you want to dive deeper into this crucial practice, check out our guide on what is FinOps.

By embracing these principles, you can make sure your cloud budget is always working for you, not against you. You’re now equipped to make informed decisions that turn S3 from a simple storage service into a powerful and cost-effective asset for your business.

Frequently Asked Questions About S3 Costs

Even once you get the hang of the main pricing pillars, you'll run into practical questions when you start managing your Amazon S3 costs day-to-day. Let's tackle some of the most common points of confusion to help you dodge those billing surprises and get your spending under control. These are the little details that often make the difference between a predictable bill and a very expensive month.

Getting these questions answered is key to putting S3 cost management principles into practice.

How Can I Monitor My S3 Costs in Real Time?

Waiting for the end-of-month bill to land is a recipe for disaster. The good news is that AWS gives you some powerful tools to track your spending as it happens, so you can react quickly if you see an unexpected cost spike.

Your first stop should be AWS Cost Explorer. It’s a great tool for visualizing your spending over time. You can filter everything by service, letting you zero in on just your S3 expenses. This makes it easy to see what’s really driving your bill, whether it’s data transfer, storage, or requests, and spot trends before they become problems.

For a more hands-on approach, you need to set up AWS Budgets. This is your early-warning system.

- First, create a budget that tracks only your S3 usage.

- Next, set a threshold, say, 80% of what you expect to spend in a month.

- Finally, configure an alert that shoots you an email or an SNS notification the moment you cross that line.

This simple setup takes just a few minutes but ensures you’re never caught off guard by a runaway bill again.

Is S3 Intelligent Tiering Always the Best Choice?

S3 Intelligent-Tiering is a fantastic feature for automating cost savings, but it's not a silver bullet for every single use case. It truly shines when you're dealing with data that has unknown or completely unpredictable access patterns. If you just don't know how often files will be needed, Intelligent-Tiering is a lifesaver.

But what if your access patterns are crystal clear? For instance, if you know for a fact that a dataset will be hit hard for 30 days and then sit untouched in an archive, a manual lifecycle policy might actually be cheaper.

The catch with S3 Intelligent-Tiering is the small, per-object monitoring and automation fee. If you have millions of tiny files that you know are destined for long-term archival, that fee can add up and sometimes cost more than the storage savings you get from the automatic tiering.

The bottom line? Always analyze your data's lifecycle. If it's predictable, a manual lifecycle policy gives you more precise control. If its access pattern is chaotic or a total mystery, Intelligent-Tiering is almost certainly your best bet.

What Is the Biggest Hidden Cost in Amazon S3?

Without a doubt, the most common "hidden" cost that blindsides new S3 users is data transfer out to the internet. So many people carefully budget for their storage needs but completely forget to account for the cost of users, customers, or applications actually downloading that data.

This charge is sneaky because data transfer into S3 is free, which can create a false sense of security. But if you're hosting a popular website, serving up video files, or offering software downloads, every single gigabyte your users pull from your S3 buckets adds to your bill. This cost scales directly with your traffic, meaning a single viral piece of content can cause your bill to skyrocket overnight.

Ready to stop guessing and start saving? CLOUD TOGGLE gives you precise control over your cloud resources, letting you automatically shut down idle servers to cut waste from your AWS and Azure bills. Start your free trial and see how much you can save at https://cloudtoggle.com.